import numpy as np

import matplotlib.pyplot as plt

from IPython.display import clear_output

%matplotlib inline

from sklearn.model_selection import train_test_split

rng = np.random.default_rng()

def logistic(x):

return 1.0/(1.0 + np.exp(-x))Multi-layer Perceptron

The goal of this exercise is to implement a shallow multi-layer perceptron to perform non-linear classification. Let’s start with usual imports, including the logistic function.

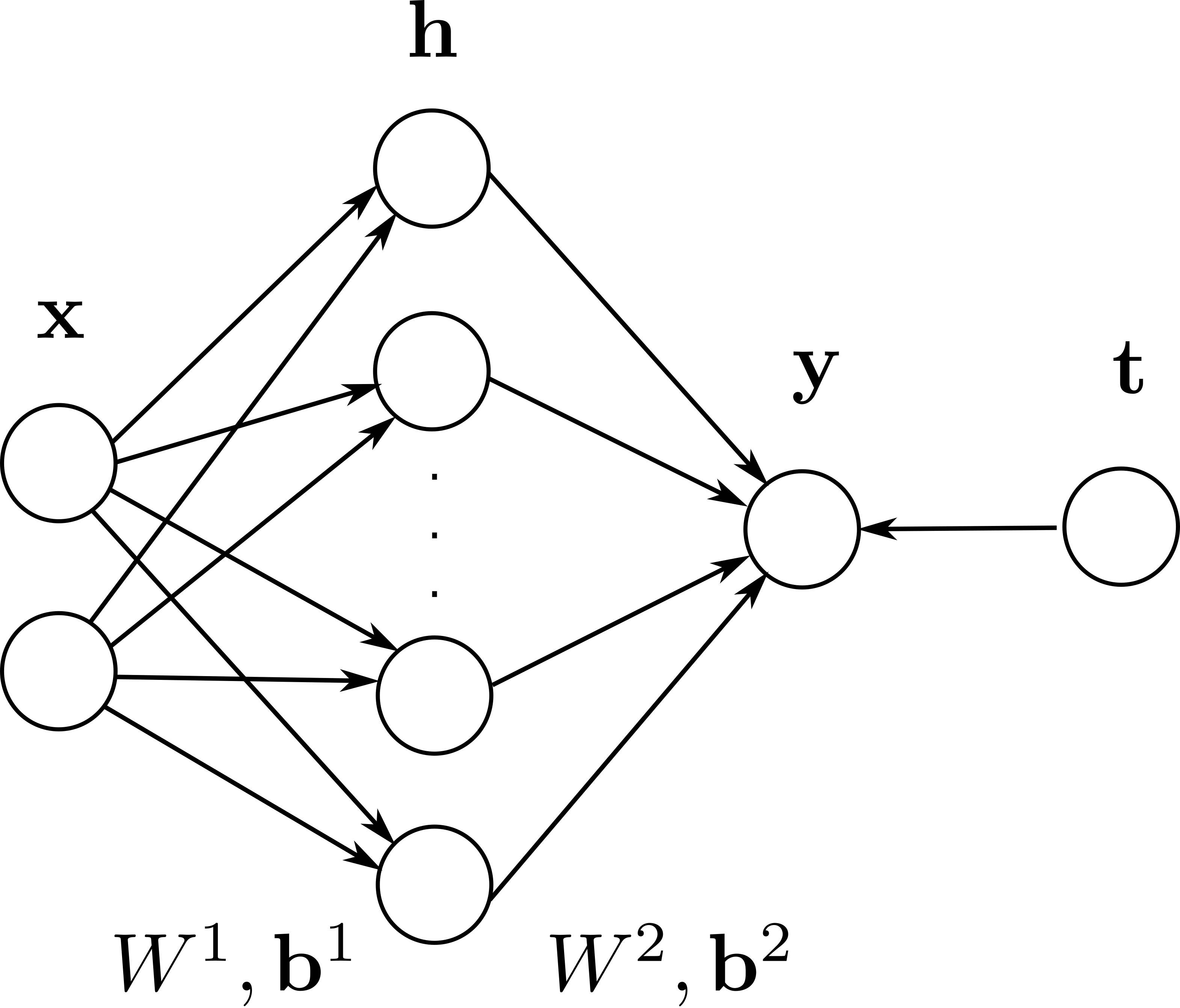

Structure of the MLP

In this exercise, we will consider a MLP for non-linear binary classification composed of 2 input neurons \mathbf{x}, one output neuron y and K hidden neurons in a single hidden layer (\mathbf{h}).

The output neuron is a vector \mathbf{y} with one element that sums its inputs with K weights W^2 and a bias \mathbf{b}^2.

\mathbf{y} = \sigma( W^2 \times \mathbf{h} + \mathbf{b}^2)

It uses the logistic transfer function:

\sigma(x) = \dfrac{1}{1 + \exp -x}

As in logistic regression for linear classification, we will interpret y as the probability that the input \mathbf{x} belongs to the positive class.

W^2 is a 1 \times K matrix (we could interpret it as a vector, but this will make the computations easier) and \mathbf{b}^2 is a vector with one element.

Each of the K hidden neurons receives 2 weights from the input layer, what gives a K \times 2 weight matrix W^1, and K biases in the vector \mathbf{b}^1. They will also use the logistic activation function at first:

\mathbf{h} = \sigma(W^1 \times \mathbf{x} + \mathbf{b}^1)

The goal is to implement the backpropagation algorithm by comparing the desired output t with the prediction y:

- The output error is a vector with one element:

\delta = (\mathbf{t} - \mathbf{y})

- The backpropagated error is a vector with K elements:

\delta_\text{hidden} = \sigma'(W^1 \times \mathbf{x} + \mathbf{b}^1) \, W_2^T \times \delta

(W^2 is a 1 \times K matrix, so W_2^T \times \delta is a K \times 1 vector. The vector \sigma'(W^1 \times \mathbf{x} + \mathbf{b}^1) is multiplied element-wise.)

- Parameter updates follow the delta learning rule:

\Delta W^1 = \eta \, \delta_\text{hidden} \times \mathbf{x}^T

\Delta \mathbf{b}^1 = \eta \, \delta_\text{hidden}

\Delta W^2 = \eta \, \delta \, \mathbf{h}^T

\Delta \mathbf{b}^2 = \eta \, \delta

Notice the transpose operators to obtain the correct shapes. You will remember that the derivative of the logistic function is given by:

\sigma'(x)= \sigma(x) \, (1- \sigma(x))

Q: Why do not we use the derivative of the transfer function of the output neuron when computing the output error \delta?

A: As in logistic regression, we will interpret the output of the network as the probability of belonging to the positive class. We therefore use (implicitly) the cross-entropy / negative log-likelihood loss function, whose gradient does not include the derivative of the logistic function.

Data

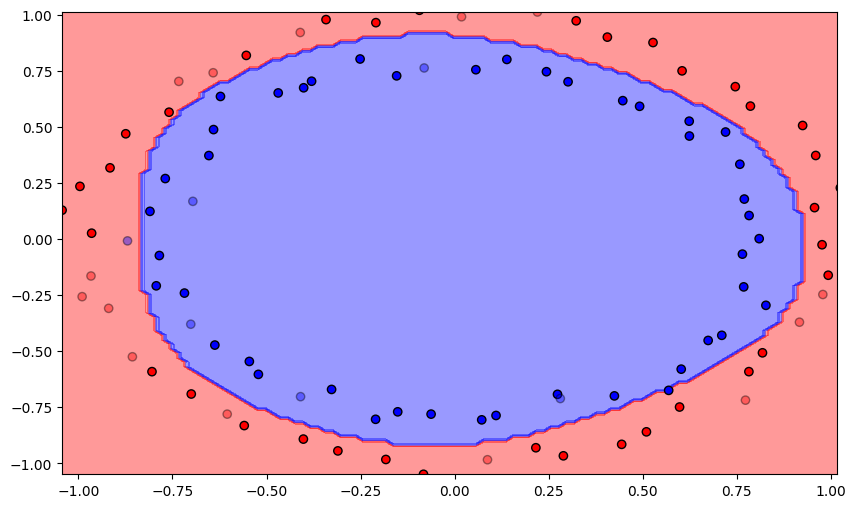

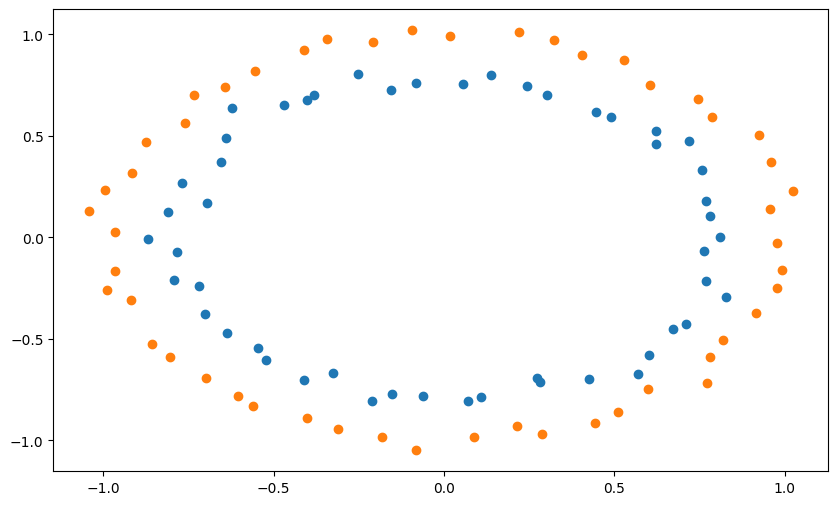

The MLP will be trained on a non-linear dataset with samples of each class forming a circle. Each sample has two input dimensions. In the cell below, blue points represent the positive class (t=1), orange ones the negative class (t=0).

from sklearn.datasets import make_circles

N = 100

d = 2

X, t = make_circles(n_samples=N, noise = 0.03, random_state=42)

plt.figure(figsize=(10, 6))

plt.scatter(X[t==1, 0], X[t==1, 1])

plt.scatter(X[t==0, 0], X[t==0, 1])

plt.show()

Q: Split the data into a training and test set (80/20). Make sure to call them X_train, X_test, t_train, t_test.

X_train, X_test, t_train, t_test = train_test_split(X, t, test_size=.2, random_state=42)Class definition

The neural network is entirely defined by its parameters, i.e. the weight matrices and bias vectors, as well the transfer function of the hidden neurons. In order to make your code more reusable, the MLP will be implemented as a Python class. The following cell defines the class, but we will explain it step by step afterwards.

class MLP:

def __init__(self, d, K, activation_function, max_val, eta):

self.d = d

self.K = K

self.activation_function = activation_function

self.eta = eta

self.W1 = rng.uniform(-max_val, max_val, (K, d))

self.b1 = rng.uniform(-max_val, max_val, (K, 1))

self.W2 = rng.uniform(-max_val, max_val, (1, K))

self.b2 = rng.uniform(-max_val, max_val, (1, 1))

def feedforward(self, x):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# Hidden layer

self.h = self.activation_function(np.dot(self.W1, x) + self.b1)

# Output layer

self.y = logistic(np.dot(self.W2, self.h) + self.b2)

def train(self, X_train, t_train, nb_epochs, visualize=True):

errors = []

for epoch in range(nb_epochs):

nb_errors = 0

# Epoch

for i in range(X_train.shape[0]):

# Feedforward pass: sets self.h and self.y

self.feedforward(X_train[i, :])

# Backpropagation

self.backprop(X_train[i, :], t_train[i])

# Predict the class:

if self.y[0, 0] > 0.5:

c = 1

else:

c = 0

# Count the number of misclassifications

if t_train[i] != c:

nb_errors += 1

# Compute the error rate

errors.append(nb_errors/X_train.shape[0])

# Plot the decision function every 10 epochs

if epoch % 10 == 0 and visualize:

self.plot_classification()

# Stop when the error rate is 0

if nb_errors == 0:

if visualize:

self.plot_classification()

break

return errors, epoch+1

def backprop(self, x, t):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# TODO: implement backpropagation

def test(self, X_test, t_test):

nb_errors = 0

for i in range(X_test.shape[0]):

# Feedforward pass

self.feedforward(X_test[i, :])

# Predict the class:

if self.y[0, 0] > 0.5:

c = 1

else:

c = 0

# Count the number of misclassifications

if t_test[i] != c:

nb_errors += 1

return nb_errors/X_test.shape[0]

def plot_classification(self):

# Allow redrawing

clear_output(wait=True)

x_min, x_max = X_train[:, 0].min(), X_train[:, 0].max()

y_min, y_max = X_train[:, 1].min(), X_train[:, 1].max()

xx, yy = np.meshgrid(np.arange(x_min, x_max, .02), np.arange(y_min, y_max, .02))

x1 = xx.ravel()

x2 = yy.ravel()

x = np.array([[x1[i], x2[i]] for i in range(x1.shape[0])])

self.feedforward(x.T)

Z = self.y.copy()

Z[Z>0.5] = 1

Z[Z<=0.5] = 0

from matplotlib.colors import ListedColormap

cm_bright = ListedColormap(['#FF0000', '#0000FF'])

fig = plt.figure(figsize=(10, 6))

plt.contourf(xx, yy, Z.reshape(xx.shape), cmap=cm_bright, alpha=.4)

plt.scatter(X_train[:, 0], X_train[:, 1], c=t_train, cmap=cm_bright, edgecolors='k')

plt.scatter(X_test[:, 0], X_test[:, 1], c=t_test, cmap=cm_bright, alpha=0.4, edgecolors='k')

plt.xlim(xx.min(), xx.max())

plt.ylim(yy.min(), yy.max())

plt.show() The constructor __init__ of the class accepts several arguments:

dis the number inputs, here 2.Kis the number of hidden neurons.activation_functionis the function to use for the hidden neurons, for example thelogisticfunction defined at the beginning of the notebook. Note that the name of the method can be stored as a variable.max_valis the maximum value used to initialize the weight matrices.etais the learning rate.

The constructor starts by saving these arguments as attributes, so that they can be used in other method as self.K:

def __init__(self, d, K, activation_function, max_val, eta):

self.d = d

self.K = K

self.activation_function = activation_function

self.eta = etaThe constructor then initializes randomly the weight matrices and bias vectors, uniformly between -max_val and max_val.

self.W1 = rng.uniform(-max_val, max_val, (K, d))

self.b1 = rng.uniform(-max_val, max_val, (K, 1))

self.W2 = rng.uniform(-max_val, max_val, (1, K))

self.b2 = rng.uniform(-max_val, max_val, (1, 1))You can then already create the MLP object and observe how the parameters are initialized:

mlp = MLP(d=2, K=15, activation_function=logistic, max_val=1.0, eta=0.05)Q: Create the object and print the weight matrices and bias vectors.

# Parameters

K = 15

max_val = 1.0

eta = 0.05

# Create the MLP

mlp = MLP(d, K, logistic, max_val, eta)

print(mlp.W1)

print(mlp.b1)

print(mlp.W2)

print(mlp.b2)[[-0.05976336 -0.08497881]

[ 0.3609705 0.32223523]

[ 0.36730564 -0.55654717]

[-0.20719753 0.35368985]

[ 0.74033524 0.34669996]

[-0.03313026 0.31597552]

[-0.78592444 0.19381916]

[ 0.26311789 -0.14376931]

[-0.10836461 -0.65688825]

[ 0.93464305 0.02814398]

[ 0.17003232 0.09866934]

[ 0.14558728 -0.67342277]

[ 0.09599103 -0.56060259]

[ 0.71031056 0.16669883]

[-0.43146885 -0.40314978]]

[[-0.05576069]

[ 0.64533978]

[-0.62514709]

[-0.00349511]

[-0.66535888]

[ 0.9176304 ]

[ 0.56057713]

[-0.34782236]

[ 0.09243376]

[ 0.48444012]

[ 0.61388272]

[ 0.45749073]

[ 0.83108324]

[ 0.96957611]

[ 0.03765266]]

[[-0.69548795 -0.74384074 0.73544427 -0.43764399 0.92303608 -0.47316183

0.23095071 -0.47053184 -0.01537147 -0.52445533 -0.99513169 0.66231794

-0.95377207 -0.35165498 0.61658371]]

[[0.48396291]]The feedforward method takes a vector x as input, reshapes it to make sure it has two rows, and computes the hidden activation \mathbf{h} and the prediction \mathbf{y}.

def feedforward(self, x):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# Hidden layer

self.h = self.activation_function(np.dot(self.W1, x) + self.b1)

# Output layer

self.y = logistic(np.dot(self.W2, self.h) + self.b2) Notice the use of self. to access attributes, as well as the use of np.dot() to mulitply vectors and matrices.

Q: Using the randomly initialized weights, apply the feedforward() method to an input vector (for example [0.5, 0.5]) and print h and y. What is the predicted class of the example?

x = np.array([0.5, 0.5])

mlp.feedforward(x)

print(mlp.h)

print(mlp.y)

if mlp.y[0, 0] > 0.5:

print("positive class")

else:

print("negative class")[[0.46801081]

[0.72848361]

[0.32744411]

[0.51743069]

[0.46957731]

[0.74250954]

[0.56574819]

[0.42845731]

[0.4279567 ]

[0.72428827]

[0.67879368]

[0.5482427 ]

[0.64537656]

[0.80346306]

[0.40620971]]

[[0.15390313]]

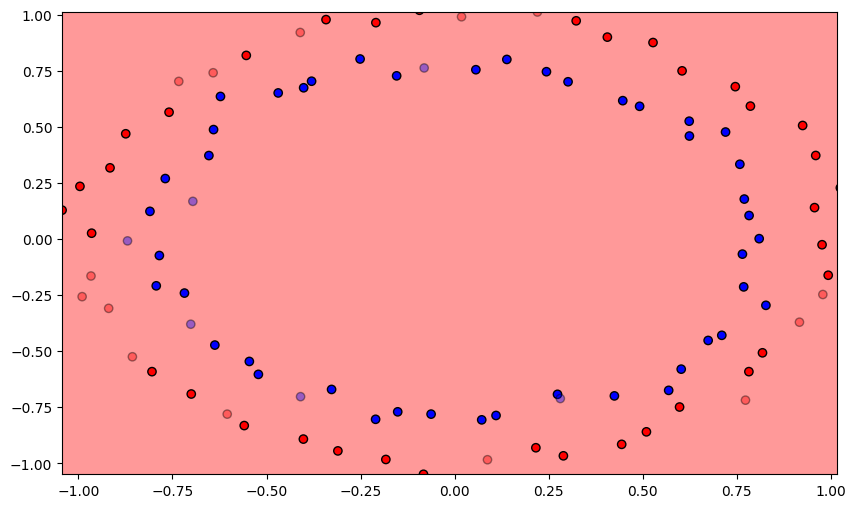

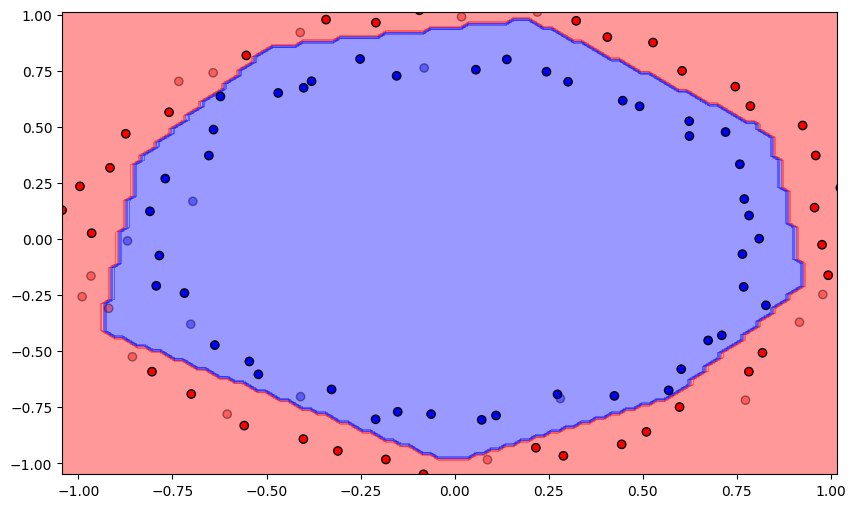

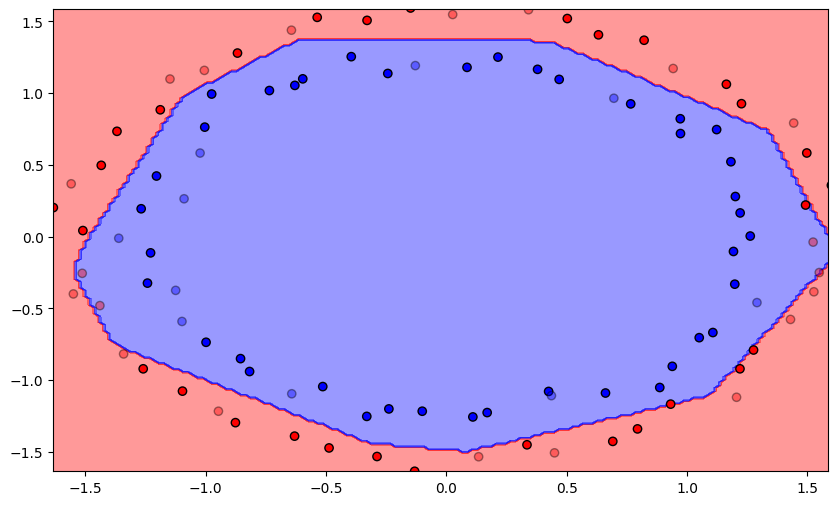

negative classThe class also provides a visualization method. It is not import to understand the code for the exercise, so you can safely skip it. It displays the training data as plain points, the test data as semi-transparent points and displays the decision function as a background color (all points in the blue region will be classified as negative examples).

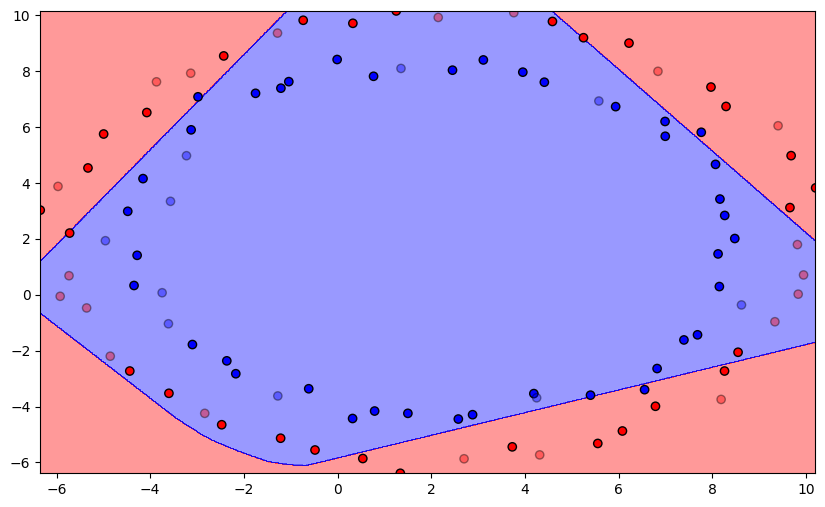

Q: Plot the initial classification on the dataset with random weights. Is there a need for learning? Reinitialize the weights and biases multiple times. What do you observe?

mlp = MLP(d, K, logistic, max_val, eta)

mlp.plot_classification()

A: The output neurons answers the same class for the whole input space, or is linear at best depending on initialization. Let’s learn!

Backpropagation

The train() method implements the training loop you have already implemented several times: several epochs over the training set, making a prediction for each input and modifying the parameters according to the prediction error:

def train(self, X_train, t_train, nb_epochs, visualize=True):

errors = []

for epoch in range(nb_epochs):

nb_errors = 0

# Epoch

for i in range(X_train.shape[0]):

# Feedforward pass: sets self.h and self.y

self.feedforward(X_train[i, :])

# Backpropagation

self.backprop(X_train[i, :], t_train[i])

# Predict the class:

if self.y[0, 0] > 0.5:

c = 1

else:

c = 0

# Count the number of misclassifications

if t_train[i] != c:

nb_errors += 1

# Compute the error rate

errors.append(nb_errors/X_train.shape[0])

# Plot the decision function every 10 epochs

if epoch % 10 == 0 and visualize:

self.plot_classification()

# Stop when the error rate is 0

if nb_errors == 0:

if visualize:

self.plot_classification()

break

return errors, epoch+1The training methods stops after nb_epochs epochs or when no error is made during the last epoch. The decision function is visualized every 10 epochs to better understand what is happening. The method returns a list containing the error rate after each epoch, as well as the number of epochs needed to reach an error rate of 0.

The only thing missing is the backprop(x, t) method, which currently does nothing:

def backprop(self, x, t):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# TODO: implement backpropagationQ: Implement the online backpropagation algorithm.

All you have to do is to backpropagate the output error and adapt the parameters using the delta learning rule:

- compute the output error

delta. - compute the backpropagated error

delta_hidden. - increment the parameters

self.W1, self.b1, self.W2, self.b2accordingly.

The only difficulty is to take care of the shape of each matrix (before multiplying two matrices or vectors, test what their shape is).

Note: you can either edit directly the cell containing the definition of the class, or create a new class TrainableMLP inheriting from the class MLP and simply redefine the backprop() method. The solution will use the second option to be more readable, but it does not matter.

class TrainableMLP (MLP):

def backprop(self, x, t):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# Output error

delta = (t - self.y)

# Hidden error

delta_hidden = np.dot(self.W2.T, delta) * self.h * (1. - self.h)

# Learn the output weights

self.W2 += self.eta * delta * self.h.T

# Learn the output bias

self.b2 += self.eta * delta

# Learn the hidden weights

self.W1 += self.eta * np.outer(delta_hidden, x)

# Learn the hidden biases

self.b1 += self.eta * delta_hidden

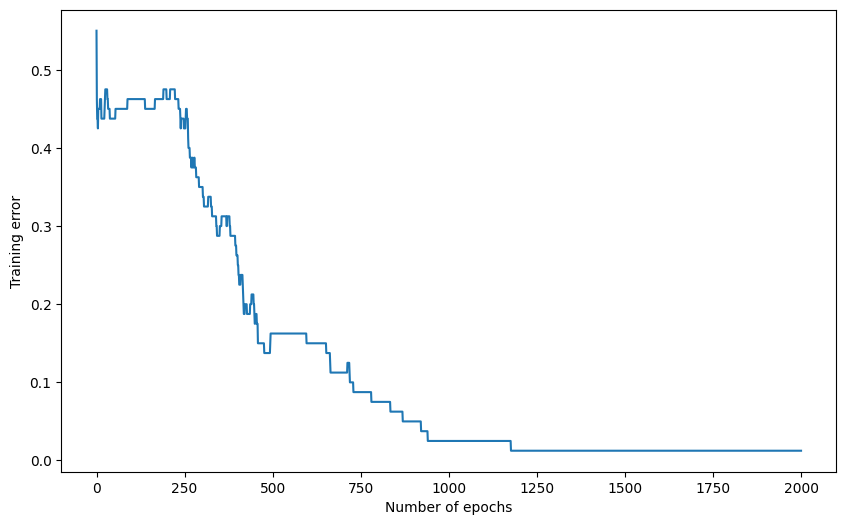

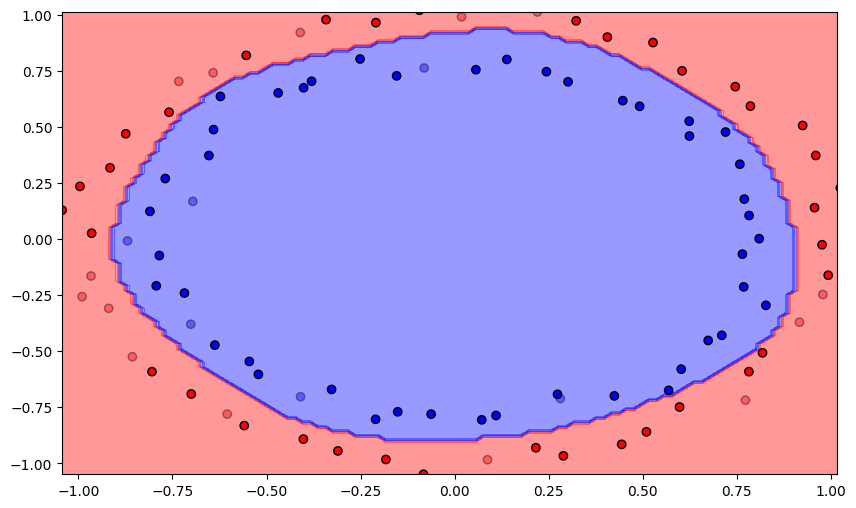

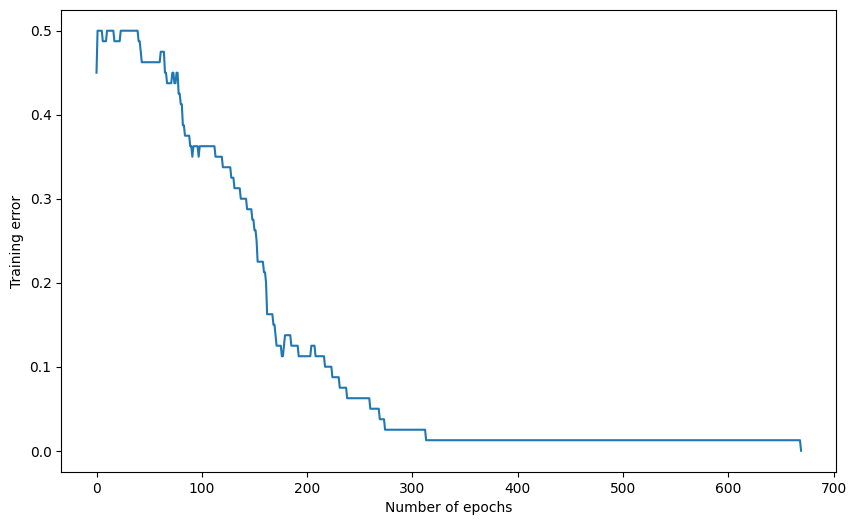

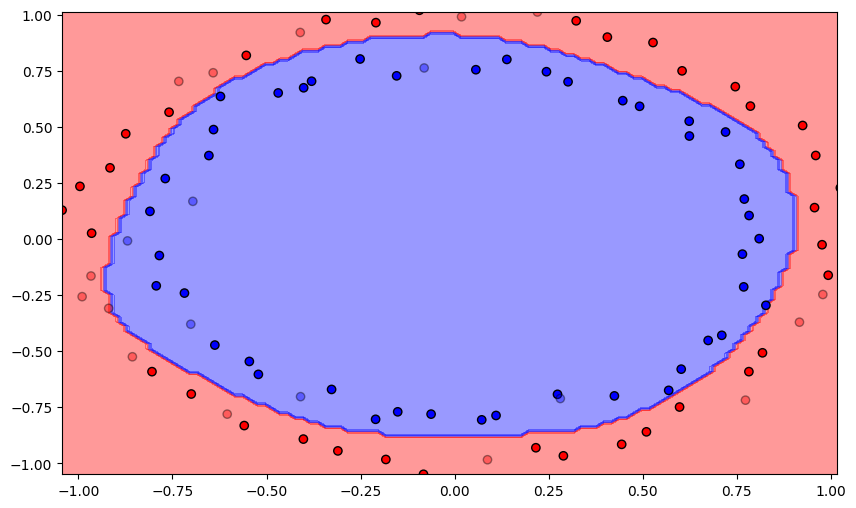

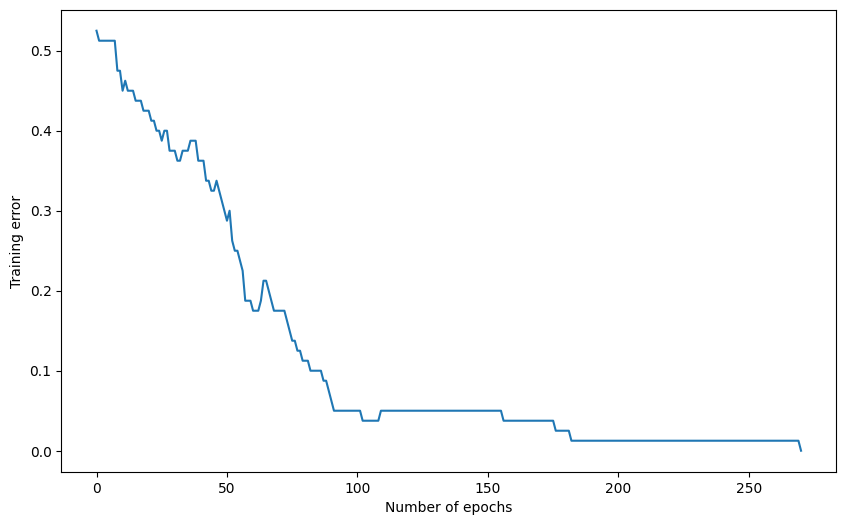

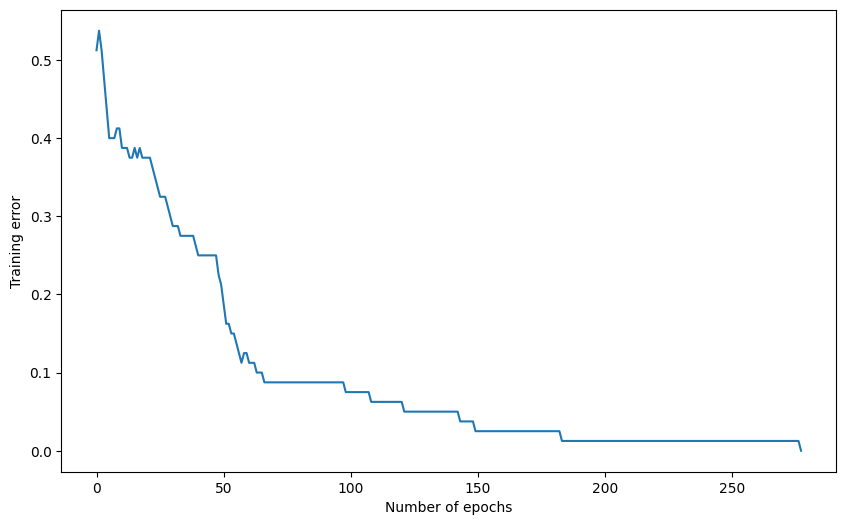

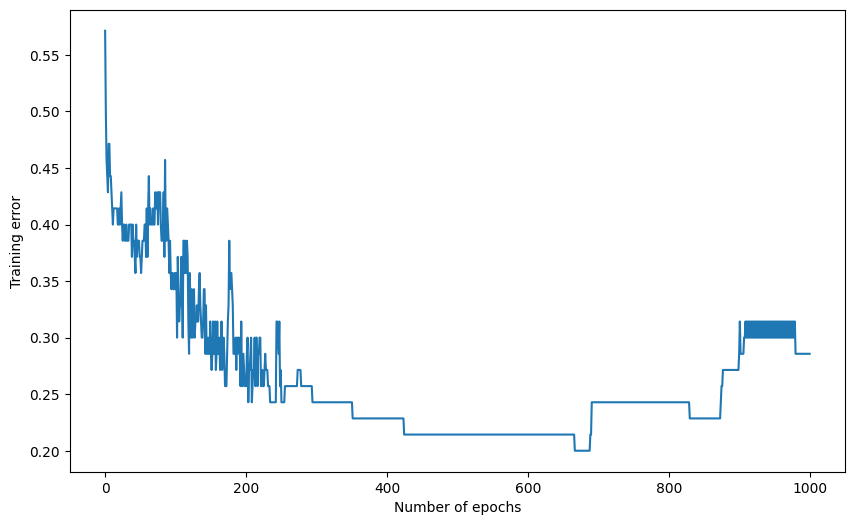

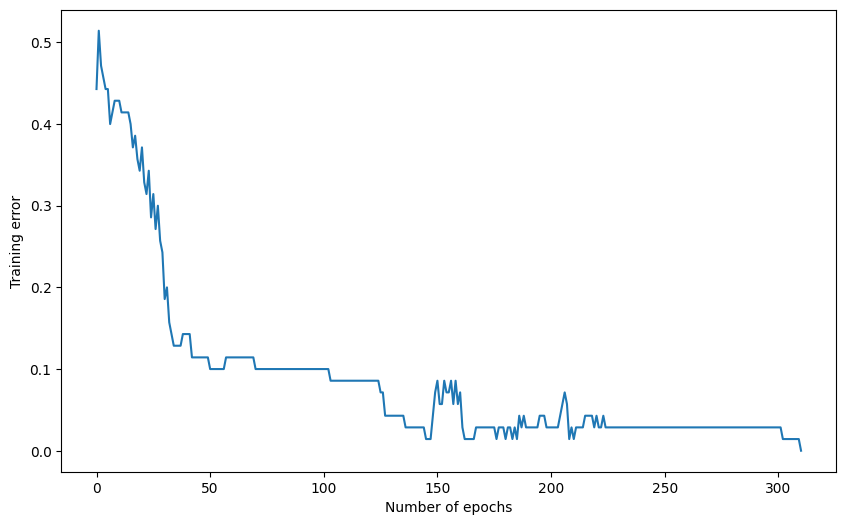

Q: Train the MLP for 1000 epochs on the data using a learning rate of 0.05, 15 hidden neurons and weights initialized between -1 and 1. Plot the evolution of the training error.

K = 15

max_val = 1.0

eta = 0.05

# Create the MLP

mlp = TrainableMLP(d, K, logistic, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 670

Training accuracy: 1.0

Q: Use the test() method to compute the error on the test set. What is the test accuracy of your network after training? Compare it to the training accuracy.

test_error = mlp.test(X_test, t_test)

print('Test accuracy:', 1. - test_error)Test accuracy: 1.0Experiments

Influence of the learning rate

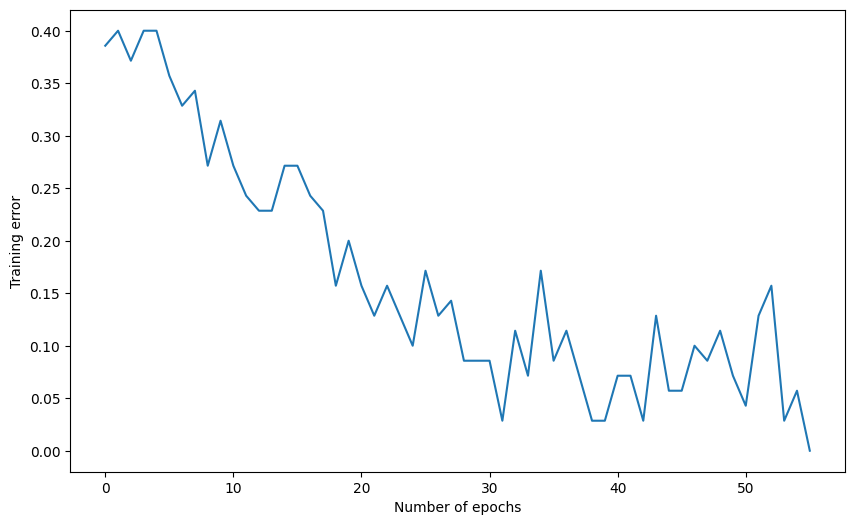

Q: Vary the learning rate between extreme values. How does the performance evolve?

K = 15

max_val = 1.0

eta = 0.2

# Create the MLP

mlp = TrainableMLP(d, K, logistic, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 271

Training accuracy: 1.0

Test accuracy: 1.0

A: For such small networks and easy problems, a high learning rate of 0.2 works well (ca 200 epochs to converge). This won’t be true for deeper networks. Learning rates smaller than 0.01 take forever.

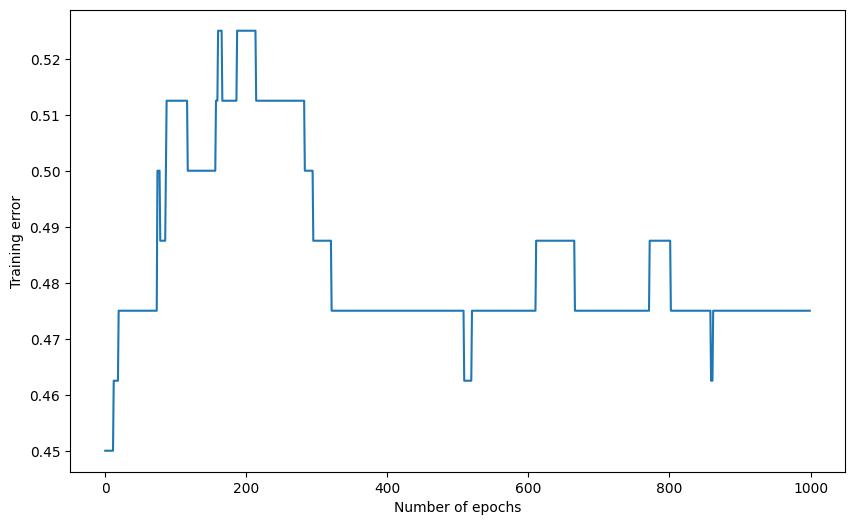

Influence of weight initialization

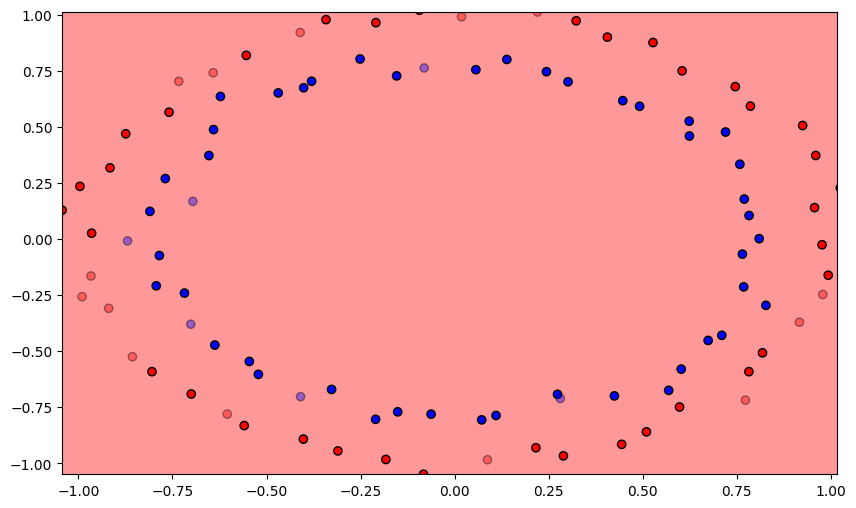

Q: The weights are initialized randomly between -1 and 1. Try to initialize them to 0. Does it work? Why?

K = 15

max_val = 0.0

eta = 0.1

# Create the MLP

mlp = TrainableMLP(d, K, logistic, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 1000

Training accuracy: 0.525

Test accuracy: 0.30000000000000004

A: This is a simple example of vanishing gradient. The backpropagated error gets multiplied by W2, which is initially zero. There is therefore no backpropagated error, the first layer cannot learn anything for a quite long time.

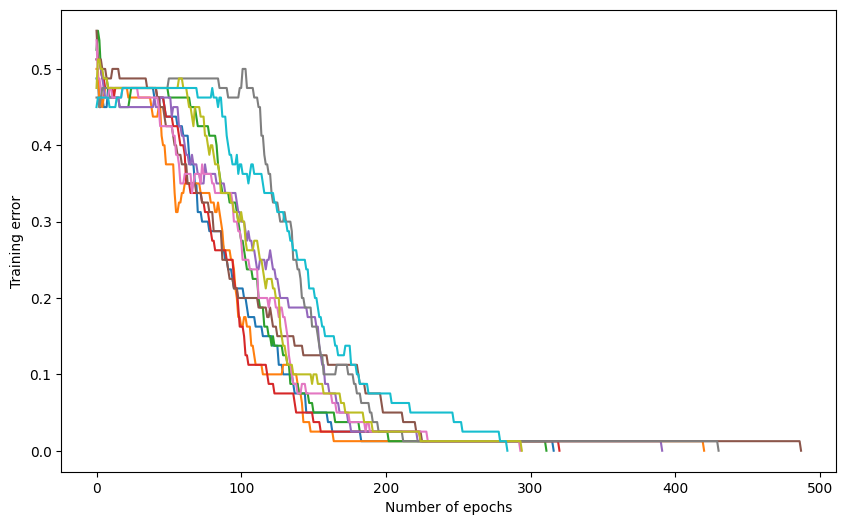

Q: For a fixed number of hidden neurons (e.g. K=15) and a correct value of eta, train 10 times the network with different initial weights and superimpose on the same plot the evolution of the training error. Conclude.

K = 15

max_val = 1.0

eta = 0.1

plt.figure(figsize=(10, 6))

for trial in range(10):

# Create the MLP

mlp = TrainableMLP(d, K, logistic, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000, visualize=False)

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

A: Because of the random initialization of the weights, some networks converge faster than others, as their weights are initially closer to the solution and gradient descent is an iterative method.

With the current configuration, a maximum of 1000 epochs is not too much, but we can clearly do better.

Influence of the transfer function

Q: Modify the backprop() method so that it applies backpropagation correctly for any of the four activation functions:

- linear

- logistic

- tanh

- relu

# Linear transfer function

def linear(x):

return x

# tanh transfer function

def tanh(x):

return np.tanh(x)

# ReLU transfer function

def relu(x):

x = x.copy()

x[x < 0.] = 0.

return xRemember that the derivatives of these activations functions are easy to compute:

- linear: f'(x) = 1

- logistic: f'(x) = f(x) \, (1 - f(x))

- tanh: f'(x) = 1 - f^2(x)

- relu: f'(x) = \begin{cases}1 \; \text{if} \; x>0\\ 0 \; \text{if} \; x \leq 0\\ \end{cases}

Hint: activation_function is a variable like others, although it is the name of a method. You can apply comparisons on it:

if self.activation_function == linear:

diff = something

elif self.activation_function == logistic:

diff = something

elif self.activation_function == tanh:

diff = something

elif self.activation_function == relu:

diff = somethingclass TrainableMLP (MLP):

def backprop(self, x, t):

# Make sure x has 2 rows

x = np.array(x).reshape((self.d, -1))

# Output error

delta = (t - self.y)

# Derivative of the transfer function

if self.activation_function == linear:

diff = 1.0

elif self.activation_function == logistic:

diff = self.h * (1. - self.h)

elif self.activation_function == tanh:

diff = 1. - self.h * self.h

elif self.activation_function == relu:

diff = self.h.copy()

diff[diff <= 0] = 0

diff[diff > 0] = 1

# Hidden error

delta_hidden = np.dot(self.W2.T, delta) * diff

# Learn the output weights

self.W2 += self.eta * delta * self.h.T

# Learn the output bias

self.b2 += self.eta * delta

# Learn the hidden weights

self.W1 += self.eta * np.outer(delta_hidden, x)

# Learn the hidden biases

self.b1 += self.eta * delta_hidden

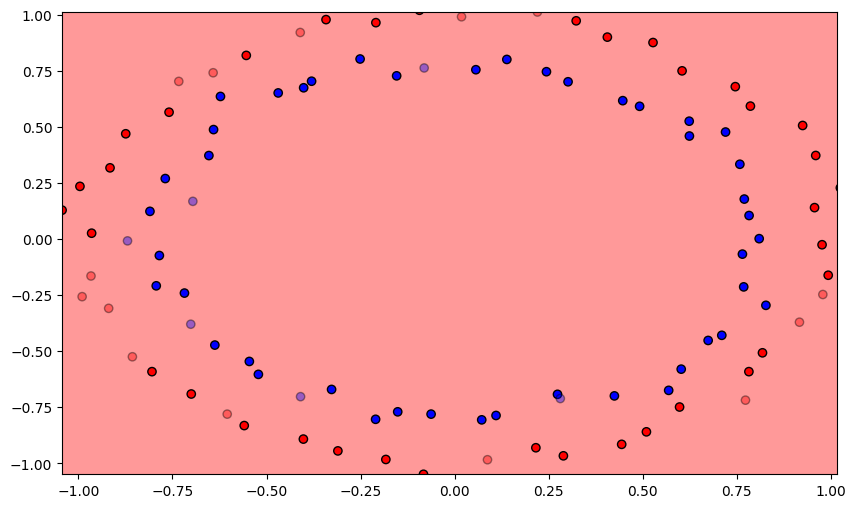

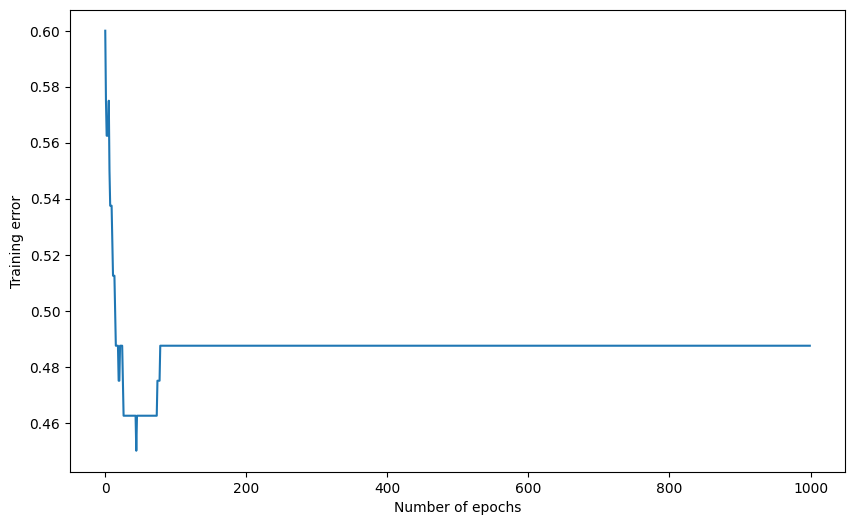

Q: Use a linear transfer function for the hidden neurons. How does performance evolve? Is the non-linearity of the transfer function important for learning?

K = 15

max_val = 1.0

eta = 0.1

activation_function=linear

# Create the MLP

mlp = TrainableMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 1000

Training accuracy: 0.5125

Test accuracy: 0.30000000000000004

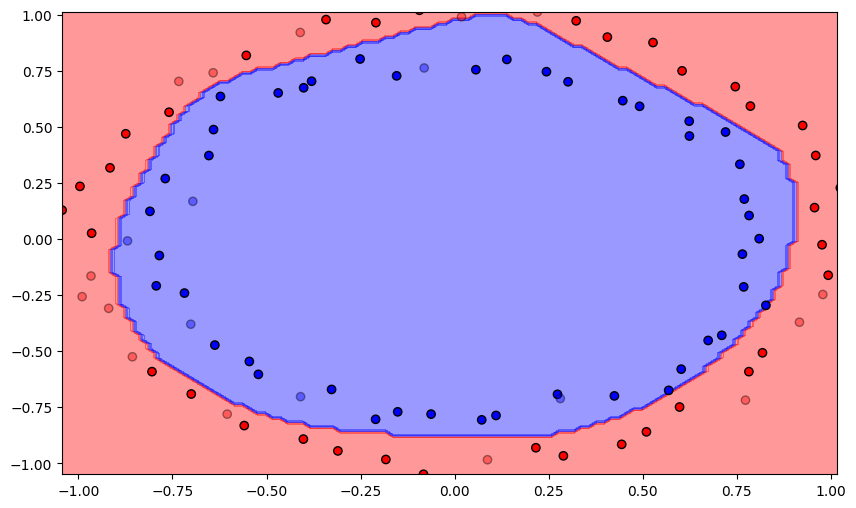

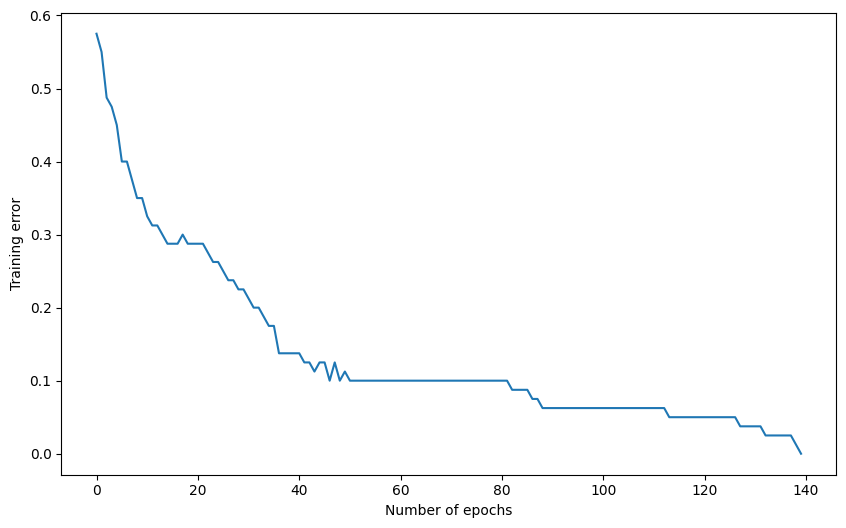

Q: Use this time the hyperbolic tangent function as a transfer function for the hidden neurons. Does it improve learning?

K = 15

max_val = 1.0

eta = 0.1

activation_function=tanh

# Create the MLP

mlp = TrainableMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 278

Training accuracy: 1.0

Test accuracy: 1.0

Q: Use the Rectified Linear Unit (ReLU) transfer function. What does it change? Conclude on the importance of the transfer function for the hidden neurons. Select the best one from now on.

K = 15

max_val = 1.0

eta = 0.1

activation_function=relu

# Create the MLP

mlp = TrainableMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 140

Training accuracy: 1.0

Test accuracy: 0.95

A: Using the linear function does not work at all, as the equivalent input/output model would be linear, and the data is non-linear. logistic and tanh work approximately in the same way. ReLU is the best.

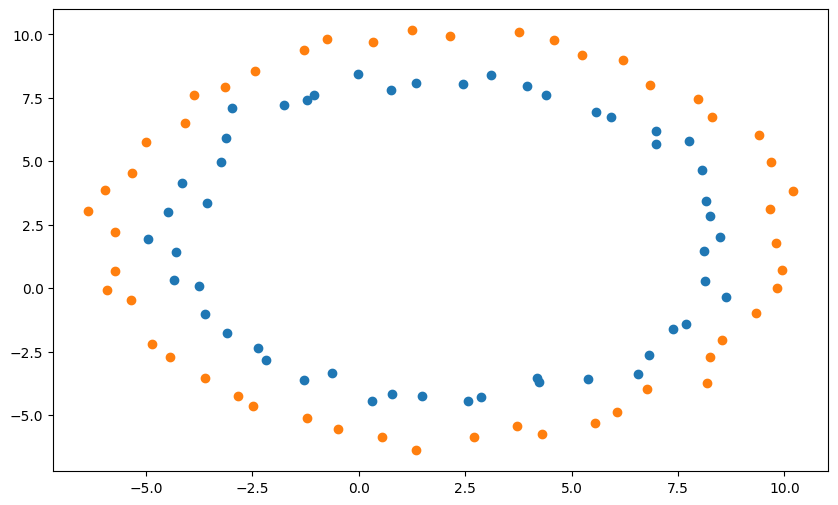

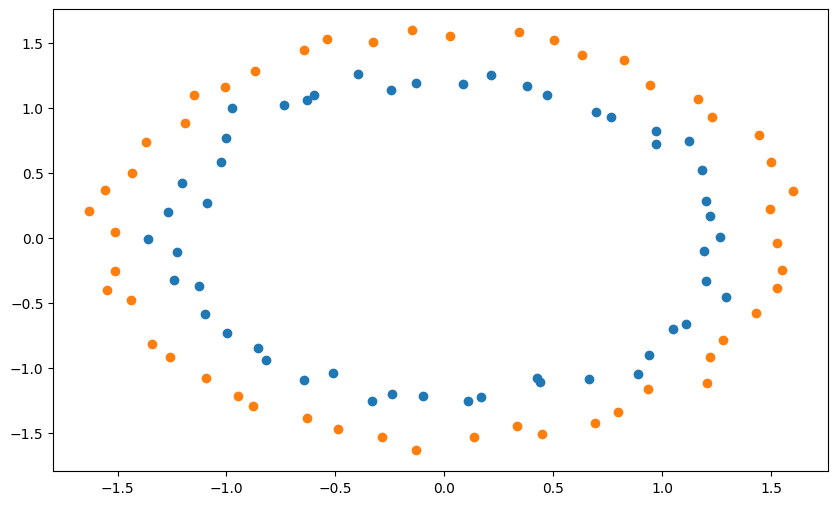

Influence of data normalization

The input data returned by make_circles is nicely center around 0, with values between -1 and 1. What happens if this is not the case with your data?

Q: Shift the input data X using the formula:

X_\text{shifted} = 8 \, X + 2

regenerate the training and test sets and train the MLP on them. What do you observe?

X_shifted = 8* X + 2

plt.figure(figsize=(10, 6))

plt.scatter(X_shifted[t==1, 0], X_shifted[t==1, 1])

plt.scatter(X_shifted[t==0, 0], X_shifted[t==0, 1])

plt.show()

X_train, X_test, t_train, t_test = train_test_split(X_shifted, t, test_size=.3, random_state=42)

K = 15

max_val = 1.0

eta = 0.1

activation_function=relu

# Create the MLP

mlp = TrainableMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 1000

Training accuracy: 0.7142857142857143

Test accuracy: 0.6

A: It does not work anymore…

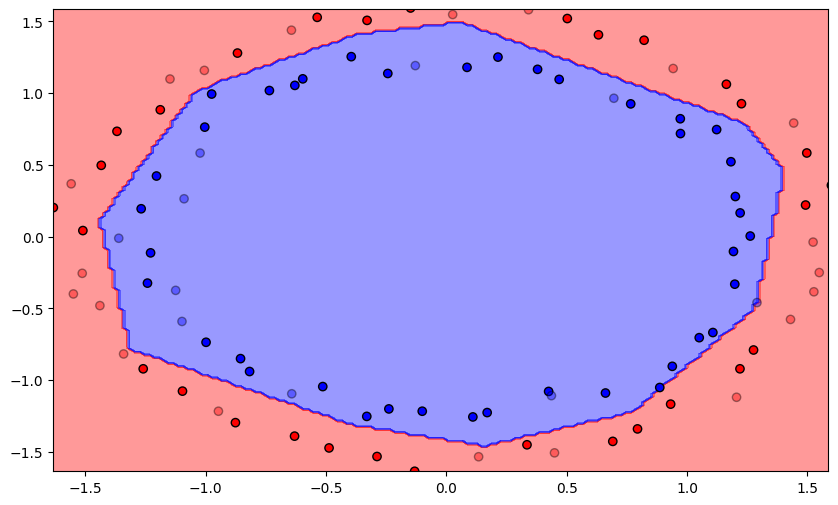

Q: Normalize the shifted data so that it has a mean of 0 and a variance of 1 in each dimension, using the formula:

X_\text{normalized} = \dfrac{X_\text{shifted} - \text{mean}(X_\text{shifted})}{\text{std}(X_\text{shifted})}

and retrain the network. Conclude.

X_scaled = (X_shifted - X_shifted.mean(axis=0))/(X_shifted.std(axis=0))

plt.figure(figsize=(10, 6))

plt.scatter(X_scaled[t==1, 0], X_scaled[t==1, 1])

plt.scatter(X_scaled[t==0, 0], X_scaled[t==0, 1])

plt.show()

X_train, X_test, t_train, t_test = train_test_split(X_scaled, t, test_size=.3, random_state=42)

K = 15

max_val = 1.0

eta = 0.1

activation_function=relu

# Create the MLP

mlp = TrainableMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 311

Training accuracy: 1.0

Test accuracy: 0.9666666666666667

A: Now it works again. Conclusion: always normalize the data before training so that each input dimension has a mean of 0 and variance of 1. Refer batch normalization later.

Influence of randomization

The training loop we used until now iterated over the training samples in the exact same order at every epoch. The samples are therefore not i.i.d (independent and identically distributed) as they follow the same sequence.

Q: Modify the train() method so that the indices of the training samples are randomized between two epochs. Check the doc of rng.permutation() for help.

class StochasticMLP (TrainableMLP):

def train(self, X_train, t_train, nb_epochs, visualize=True):

errors = []

for epoch in range(nb_epochs):

nb_errors = 0

# Epoch

for i in rng.permutation(X_train.shape[0]):

# Feedforward pass: sets self.h and self.y

self.feedforward(X_train[i, :])

# Backpropagation

self.backprop(X_train[i, :], t_train[i])

# Predict the class:

if self.y[0, 0] > 0.5:

c = 1

else:

c = 0

# Count the number of misclassifications

if t_train[i] != c:

nb_errors += 1

# Compute the error rate

errors.append(nb_errors/X_train.shape[0])

# Plot the decision function every 10 epochs

if epoch % 10 == 0 and visualize:

self.plot_classification()

# Stop when the error rate is 0

if nb_errors == 0:

if visualize:

self.plot_classification()

break

return errors, epochK = 15

max_val = 1.0

eta = 0.1

activation_function=relu

# Create the MLP

mlp = StochasticMLP(d, K, activation_function, max_val, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 55

Training accuracy: 1.0

Test accuracy: 0.8666666666666667

Influence of weight initialization - part 2

According to the empirical analysis by Glorot and Bengio in “Understanding the difficulty of training deep feedforward neural networks”, the optimal initial values for the weights between two layers of a MLP are uniformly taken in the range:

\mathcal{U} ( - \sqrt{\frac{6}{N_{\text{in}}+N_{\text{out}}}} , \sqrt{\frac{6}{N_{\text{in}}+N_{\text{out}}}} )

where N_{\text{in}} is the number of neurons in the first layer and N_{\text{out}} the number of neurons in the second layer.

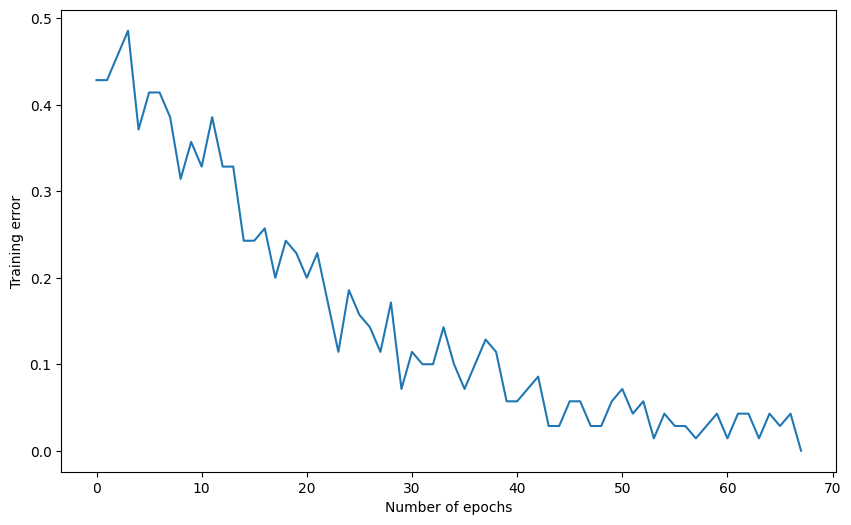

Q: Modify the constructor of your class to initialize both hidden and output weights with this new range. The biases should be initialized to 0. What is the effect?

class GlorotMLP (StochasticMLP):

def __init__(self, d, K, activation_function, eta):

self.d = d

self.K = K

self.activation_function = activation_function

self.eta = eta

max_val = np.sqrt(6./(d+K))

self.W1 = rng.uniform(-max_val, max_val, (K, d))

self.b1 = np.zeros((K, 1))

max_val = np.sqrt(6./(K+1))

self.W2 = rng.uniform(-max_val, max_val, (1, K))

self.b2 = np.zeros((1, 1))K = 15

eta = 0.1

activation_function=relu

# Create the MLP

mlp = GlorotMLP(d, K, activation_function, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

plt.figure(figsize=(10, 6))

plt.plot(training_error)

plt.xlabel("Number of epochs")

plt.ylabel("Training error")

plt.show()

Number of epochs needed: 67

Training accuracy: 1.0

Test accuracy: 0.8666666666666667

A: The decision function seems already quite centered at the beginning (closer to the solution), so it converges faster.

Summary

Q: Now that we optimized the MLP, it is time to cross-validate again the number of hidden neurons and the learning rate. As the networks always get a training error rate of 0 and the test set is not very relevant, the main criteria will be the number of epochs needed on average to converge. Find the best MLP for the dataset (there is not a single solution), for example by iterating over multiple values of K and eta (grid search). What do you think of the change in performance between the first naive implementation and the final one? What were the most critical changes?

activation_function = relu

def run(K, eta):

# Create the MLP

mlp = GlorotMLP(d, K, activation_function, eta)

# Train the MLP

training_error, nb_epochs = mlp.train(X_train, t_train, 1000, visualize=False)

# Test the MLP

test_error = mlp.test(X_test, t_test)

print('K:', K, 'eta:', eta)

print('Number of epochs needed:', nb_epochs)

print('Training accuracy:', 1. - training_error[-1])

print('Test accuracy:', 1. - test_error)

print('-'*20)

return 1. - test_error

best_test_accuracy = 0.0

best_K, best_eta = 0, 0

for K in [10, 15, 20, 25]:

for eta in [0.01, 0.05, 0.1, 0.2, 0.3]:

acc = run(K, eta)

if acc > best_test_accuracy:

best_test_accuracy = acc

best_K, best_eta = K, eta

print("Best hyperparameters: K =", best_K, "; eta = ", best_eta)K: 10 eta: 0.01

Number of epochs needed: 222

Training accuracy: 1.0

Test accuracy: 1.0

--------------------

K: 10 eta: 0.05

Number of epochs needed: 88

Training accuracy: 1.0

Test accuracy: 0.9

--------------------

K: 10 eta: 0.1

Number of epochs needed: 47

Training accuracy: 1.0

Test accuracy: 0.7666666666666666

--------------------

K: 10 eta: 0.2

Number of epochs needed: 76

Training accuracy: 1.0

Test accuracy: 1.0

--------------------

K: 10 eta: 0.3

Number of epochs needed: 89

Training accuracy: 1.0

Test accuracy: 0.9666666666666667

--------------------

K: 15 eta: 0.01

Number of epochs needed: 370

Training accuracy: 1.0

Test accuracy: 0.8

--------------------

K: 15 eta: 0.05

Number of epochs needed: 89

Training accuracy: 1.0

Test accuracy: 0.7666666666666666

--------------------

K: 15 eta: 0.1

Number of epochs needed: 74

Training accuracy: 1.0

Test accuracy: 0.9

--------------------

K: 15 eta: 0.2

Number of epochs needed: 54

Training accuracy: 1.0

Test accuracy: 0.9333333333333333

--------------------

K: 15 eta: 0.3

Number of epochs needed: 78

Training accuracy: 1.0

Test accuracy: 0.9

--------------------

K: 20 eta: 0.01

Number of epochs needed: 203

Training accuracy: 1.0

Test accuracy: 0.8333333333333334

--------------------

K: 20 eta: 0.05

Number of epochs needed: 129

Training accuracy: 1.0

Test accuracy: 0.8

--------------------

K: 20 eta: 0.1

Number of epochs needed: 54

Training accuracy: 1.0

Test accuracy: 0.8

--------------------

K: 20 eta: 0.2

Number of epochs needed: 61

Training accuracy: 1.0

Test accuracy: 0.9666666666666667

--------------------

K: 20 eta: 0.3

Number of epochs needed: 73

Training accuracy: 1.0

Test accuracy: 0.9666666666666667

--------------------

K: 25 eta: 0.01

Number of epochs needed: 185

Training accuracy: 1.0

Test accuracy: 0.8

--------------------

K: 25 eta: 0.05

Number of epochs needed: 105

Training accuracy: 1.0

Test accuracy: 0.8

--------------------

K: 25 eta: 0.1

Number of epochs needed: 79

Training accuracy: 1.0

Test accuracy: 0.9666666666666667

--------------------

K: 25 eta: 0.2

Number of epochs needed: 54

Training accuracy: 1.0

Test accuracy: 0.9333333333333333

--------------------

K: 25 eta: 0.3

Number of epochs needed: 93

Training accuracy: 1.0

Test accuracy: 1.0

--------------------

Best hyperparameters: K = 10 ; eta = 0.01A: Quite small changes can drastically change the performance of the network, both in terms of accuracy and training time. Data normalization, Glorot initialization and the use of ReLU are the most determinant here.