2. Linear Regression¶

Slides: pdf

2.1. Linear regression¶

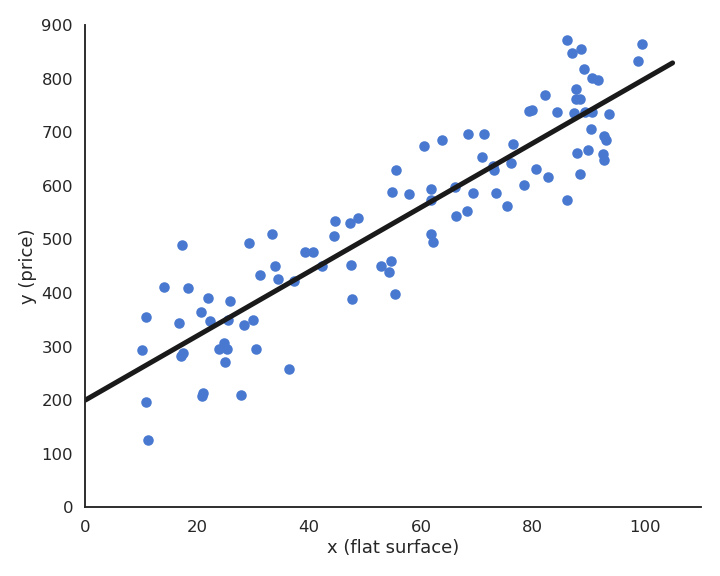

Fig. 2.23 Simple linear regression. \(x\) is the input, \(y\) the output. The data is represented by blue dots, the model by the black line.¶

Let’s consider a training set of N examples \(\mathcal{D} = (x_i, t_i)_{i=1..N}\). In linear regression, we want to learn a linear model (hypothesis) \(y\) that is linearly dependent on the input \(x\):

The free parameters of the model are the slope \(w\) and the intercept \(b\). This model corresponds to a single artificial neuron with output \(y\), having one input \(x\), one weight \(w\), one bias \(b\) and a linear activation function \(f(x) = x\).

Fig. 2.24 Artificial neuron with multiple inputs.¶

The goal of the linear regression (or least mean squares - LMS) is to minimize the mean square error (mse) between the targets and the predictions. This loss function is defined as the mathematical expectation of the quadratic error over the training set:

As the training set is finite and the samples i.i.d, we can simply replace the expectation by an average over the training set:

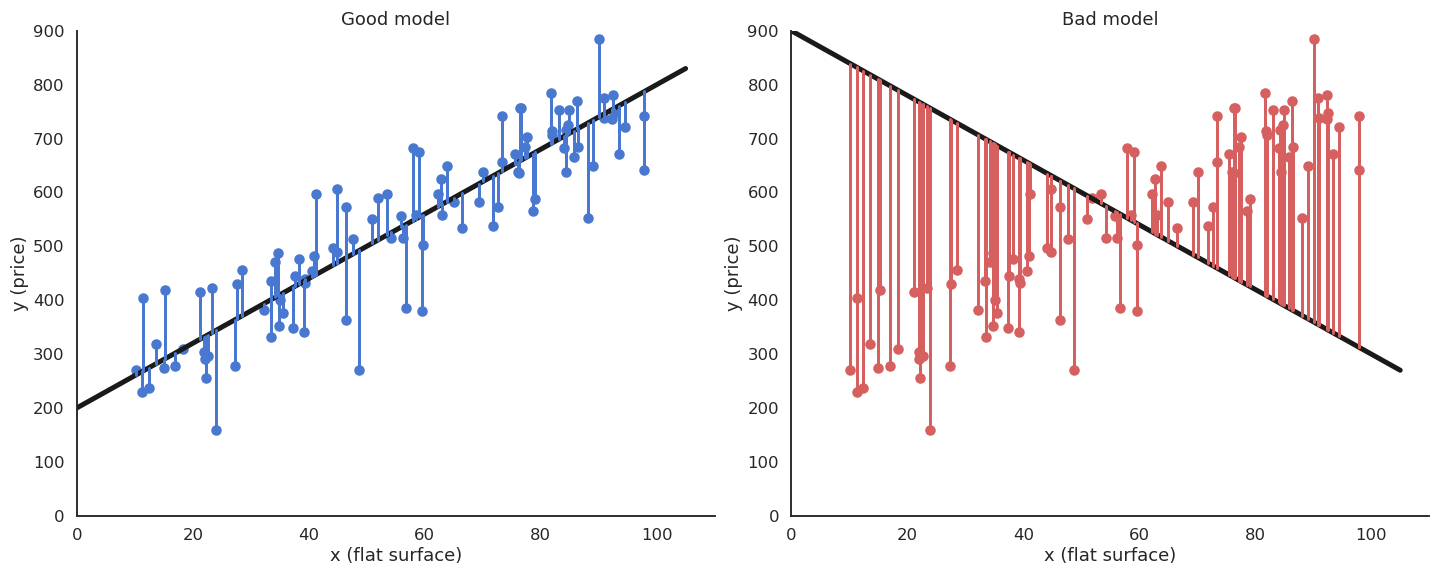

The minimum of the mse is achieved when the prediction \(y_i = f_{w, b}(x_i)\) is equal to the true value \(t_i\) for all training examples. In other words, we want to minimize the residual error of the model on the data. It is not always possible to obtain the global minimum (0) as the data may be noisy, but the closer, the better.

Fig. 2.25 A good fit to the data is when the prediction \(y_i\) (on the line) is close to the data \(t_i\) for all training examples.¶

2.1.1. Least Mean Squares¶

We search for \(w\) and \(b\) which minimize the mean square error:

We will apply gradient descent to iteratively modify estimates of \(w\) and \(b\):

Let’s search for the partial derivative of the mean square error with respect to \(w\):

Partial derivatives are linear, so the derivative of a sum is the sum of the derivatives:

This means we can compute a gradient for each training example instead of for the whole training set (see later the distinction batch/online):

The individual loss \(\mathcal{l}_i(w, b) = (t_i - y_i )^2\) is the composition of two functions:

a square error function \(g_i(y_i) = (t_i - y_i)^2\).

the prediction \(y_i = f_{w, b}(x_i) = w \, x_i + b\).

The chain rule tells us how to derive such composite functions:

The first derivative considers \(g(x)\) to be a single variable. Applied to our problem, this gives:

The square error function \(g_i(y) = (t_i - y)^2\) is easy to differentiate w.r.t \(y\):

The prediction \(y_i = w \, x_i + b\) also w.r.t \(w\) and \(b\):

The partial derivative of the individual loss is:

This gives us:

Gradient descent is then defined by the learning rules (absorbing the 2 in \(\eta\)):

Least Mean Squares (LMS) or Ordinary Least Squares (OLS) is a batch algorithm: the parameter changes are computed over the whole dataset.

The parameter changes have to be applied multiple times (epochs) in order for the parameters to converge. One can stop when the parameters do not change much, or after a fixed number of epochs.

LMS algorithm

\(w=0 \quad;\quad b=0\)

for M epochs:

\(dw=0 \quad;\quad db=0\)

for each sample \((x_i, t_i)\):

\(y_i = w \, x_i + b\)

\(dw = dw + (t_i - y_i) \, x_i\)

\(db = db + (t_i - y_i)\)

\(\Delta w = \eta \, \frac{1}{N} dw\)

\(\Delta b = \eta \, \frac{1}{N} db\)

Fig. 2.26 Visualization of least mean squares applied to a simple regression problem with \(\eta=0.1\). Each step of the animation corresponds to one epoch (iteration over the training set).¶

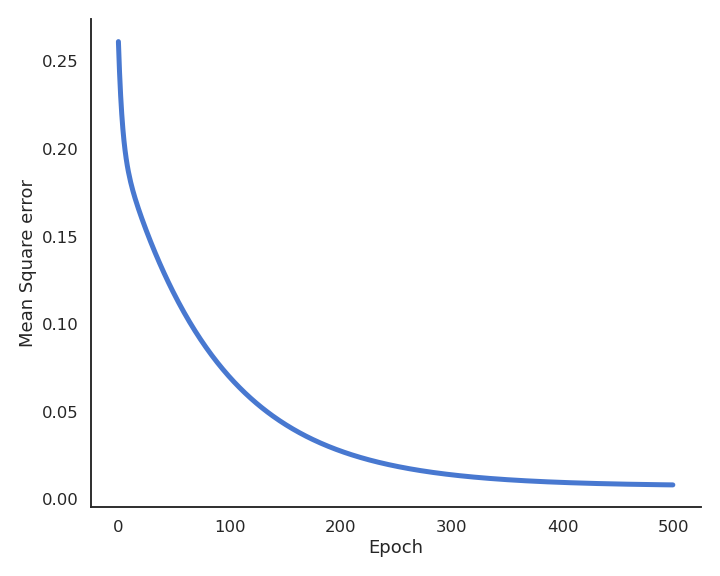

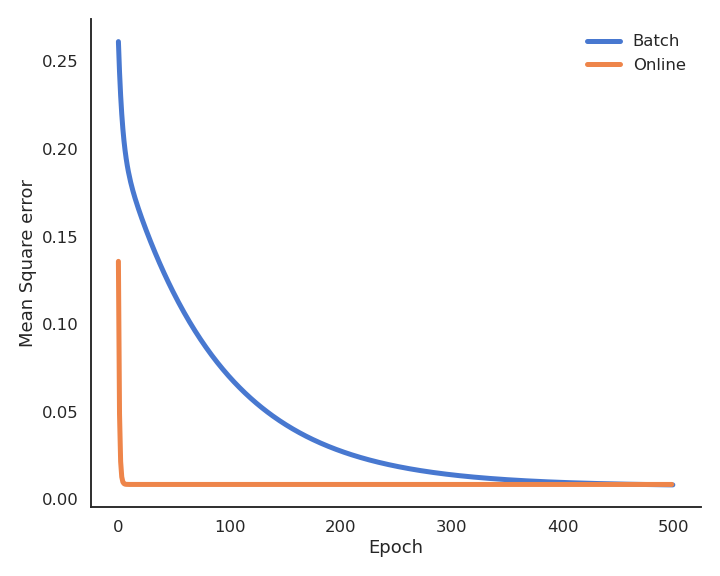

During learning, the mean square error (mse) decreases with the number of epochs but does not reach zero because of the noise in the data.

Fig. 2.27 Evolution of the loss function during training.¶

2.1.2. Delta learning rule¶

LMS is very slow, because it changes the weights only after the whole training set has been evaluated. It is also possible to update the weights immediately after each example using the delta learning rule, which is the online version of LMS:

Delta learning rule

\(w=0 \quad;\quad b=0\)

for M epochs:

for each sample \((x_i, t_i)\):

\(y_i = w \, x_i + b\)

\(\Delta w = \eta \, (t_i - y_i ) \, x_i\)

\(\Delta b = \eta \, (t_i - y_i)\)

The batch version is more stable, but the online version is faster: the weights have already learned something when arriving at the end of the first epoch. Note that the loss function is slightly higher at the end of learning (see Exercise 3 for a deeper discussion).

Fig. 2.28 Visualization of the delta learning rule applied to a simple regression problem with \(\eta = 0.1\). Each step of the animation corresponds to one epoch (iteration over the training set).¶

Fig. 2.29 Evolution of the loss function during training. With the same learning rate, the delta learning rule converges much faster but reaches a poorer minimum. Lowering the learning rate slows down learning but reaches a better minimum.¶

2.2. Multiple linear regression¶

The key idea of linear regression (one input \(x\), one output \(y\)) can be generalized to multiple inputs and outputs.

Multiple Linear Regression (MLR) predicts several output variables based on several explanatory variables:

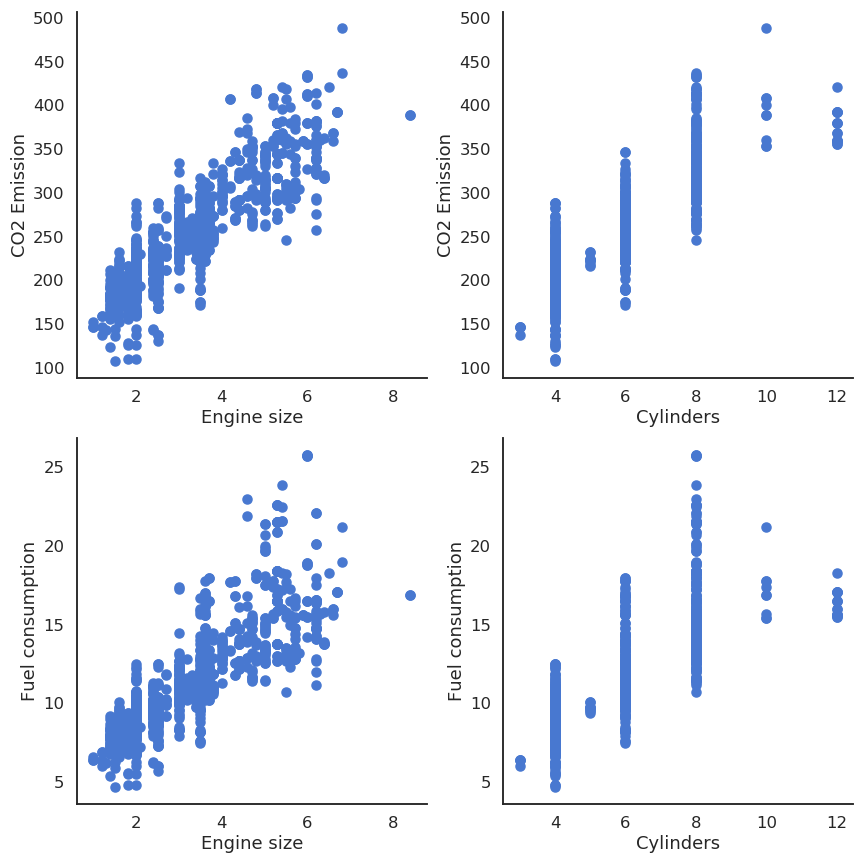

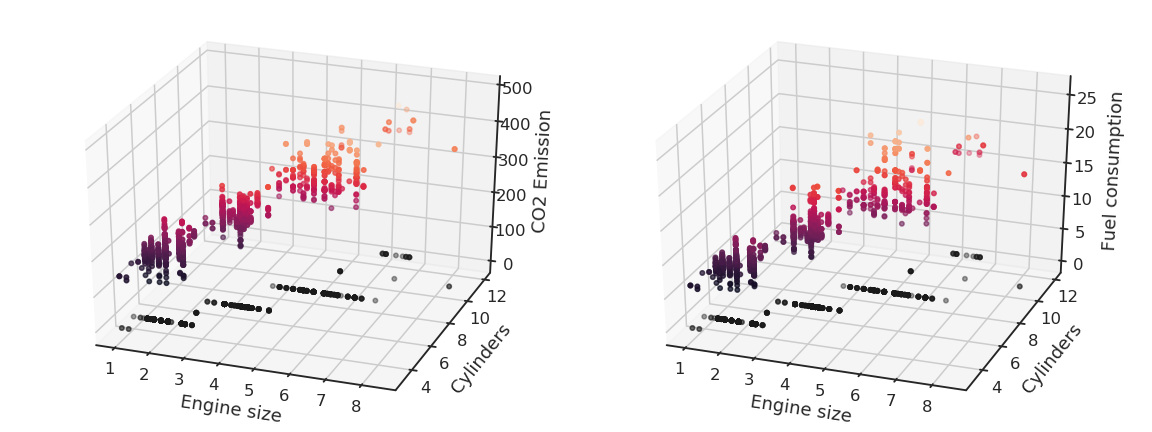

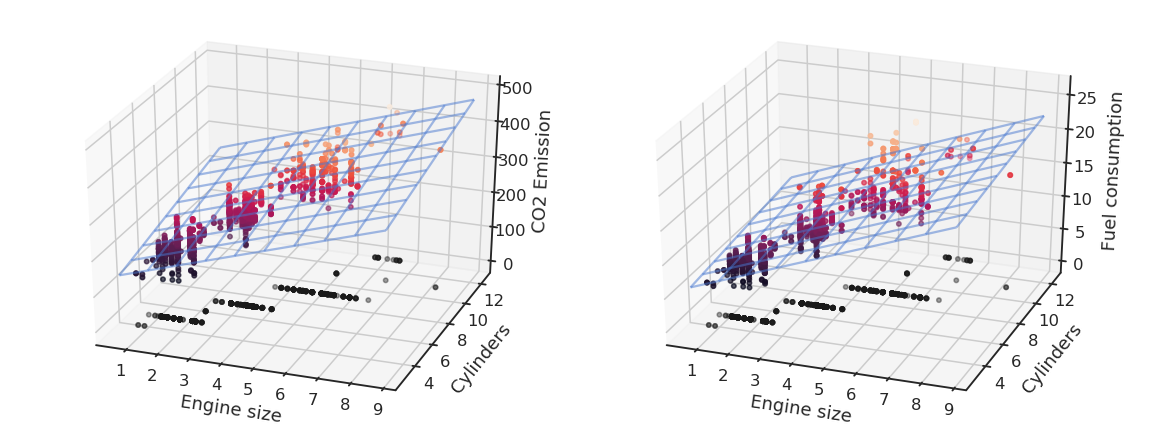

Example: fuel consumption and CO2 emissions

Let’s suppose you have 13971 measurements in some Excel file, linking engine size, number of cylinders, fuel consumption and CO2 emissions of various cars. You want to predict fuel consumption and CO2 emissions when you know the engine size and the number of cylinders.

Engine size |

Cylinders |

Fuel consumption |

CO2 emissions |

|---|---|---|---|

2 |

4 |

8.5 |

196 |

2.4 |

4 |

9.6 |

221 |

1.5 |

4 |

5.9 |

136 |

3.5 |

6 |

11 |

255 |

… |

… |

… |

… |

Fig. 2.30 CO2 emissions and fuel consumption depend almost linearly on the engine size and number of cylinders.¶

Fig. 2.31 CO2 emissions and fuel consumption depend almost linearly on the engine size and number of cylinders.¶

We can notice that the output variables seem to linearly depend on the inputs. Noting the input variables \(x_1\), \(x_2\) and the output ones \(y_1\), \(y_2\), we can define our problem as a multiple linear regression:

and solve it using the least mean squares method by minimizing the mse between the model and the data.

Fig. 2.32 The result of MLR is a plane in the input space.¶

Note

Using the Python library scikit-learn (https://scikit-learn.org), this is done in two lines of code:

from sklearn.linear_model import LinearRegression

reg = LinearRegression().fit(X, y)

The system of equations:

can be put in a matrix-vector form:

We simply create the corresponding vectors and matrices:

\(\mathbf{x}\) is the input vector, \(\mathbf{y}\) is the output vector, \(\mathbf{t}\) is the target vector. \(W\) is called the weight matrix and \(\mathbf{b}\) the bias vector.

The model is now defined by:

The problem is exactly the same as before, except that we use vectors and matrices instead of scalars: \(\mathbf{x}\) and \(\mathbf{y}\) can have any number of dimensions, the same procedure will apply. This corresponds to a linear neural network (or linear perceptron), with one output neuron per predicted value \(y_i\) using the linear activation function.

Fig. 2.33 A linear perceptron is a single layer of artificial neurons. The output vector \(\mathbf{y}\) is compared to the ground truth vector \(\mathbf{t}\) using the mse loss.¶

The mean square error still needs to be a scalar in order to be minimized. We can define it as the squared norm of the error vector:

In order to apply gradient descent, one needs to calculate partial derivatives w.r.t the weight matrix \(W\) and the bias vector \(\mathbf{b}\), i.e. gradients:

Note

Some more advanced linear algebra becomes important to know how to compute these gradients:

https://web.stanford.edu/class/cs224n/readings/gradient-notes.pdf

We search the minimum of the mse loss function:

The individual loss function \(\mathcal{l}_i(W, \mathbf{b})\) is the squared \(\mathcal{L}^2\)-norm of the error vector, what can be expressed as a dot product or a vector multiplication:

Note

Remember:

The chain rule tells us in principle that:

The gradient w.r.t the output vector \(\mathbf{y}_i\) is quite easy to obtain, as it a quadratic function of \(\mathbf{t}_i - \mathbf{y}_i\):

The proof relies on product differentiation \((f\times g)' = f' \, g + f \, g'\):

Note

We use the properties \(\nabla_{\mathbf{x}}\, \mathbf{x}^T \times \mathbf{z} = \mathbf{z}\) and \(\nabla_{\mathbf{z}} \, \mathbf{x}^T \times \mathbf{z} = \mathbf{x}\) to get rid of the transpose.

The “problem” is when computing \(\nabla_{W} \, \mathbf{y}_i = \nabla_{W} \, (W \times \mathbf{x}_i + \mathbf{b})\):

\(\mathbf{y}_i\) is a vector and \(W\) a matrix.

\(\nabla_{W} \, \mathbf{y}_i\) is then a Jacobian (matrix), not a gradient (vector).

Intuitively, differentiating \(W \times \mathbf{x}_i + \mathbf{b}\) w.r.t \(W\) should return \(\mathbf{x}_i\), but it is a vector, not a matrix…

Actually, only the gradient (or Jacobian) of \(\mathcal{l}_i(W, \mathbf{b})\) w.r.t \(W\) should be a matrix of the same size as \(W\) so that we can apply gradient descent:

We already know that:

If \(\mathbf{x}_i\) has \(n\) elements and \(\mathbf{y}_i\) \(m\) elements, \(W\) is a \(m \times n\) matrix.

Note

Remember the outer product between two vectors:

It is easy to see that the outer product between \((\mathbf{t}_i - \mathbf{y}_i)\) and \(\mathbf{x}_i\) gives a \(m \times n\) matrix:

Let’s prove it element per element on a small matrix:

The Jacobian w.r.t \(W\) can be explicitly formed using partial derivatives:

We can rearrange this matrix as an outer product:

Multiple linear regression

Batch version:

Online version (delta learning rule):

The matrix-vector notation is completely equivalent to having one learning rule per parameter:

Note

The delta learning rule is always of the form: \(\Delta w\) = eta * error * input. Biases have an input of 1.

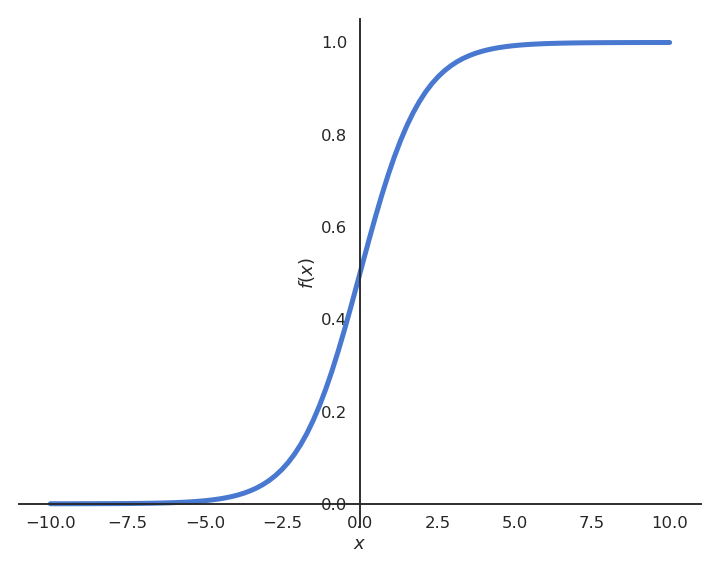

2.3. Logistic regression¶

Let’s suppose we want to perform a regression, but where the outputs \(t_i\) are bounded between 0 and 1. We could use a logistic (or sigmoid) function instead of a linear function in order to transform the input into an output:

Fig. 2.34 Logistic or sigmoid function \(\sigma(x)=\displaystyle\frac{1}{1+\exp(-x)}\).¶

By definition of the logistic function, the prediction \(y\) will be bounded between 0 and 1, what matches the targets \(t\). Let’s now apply gradient descent on the mse loss using this new model. The individual loss will be:

The partial derivative of the individual loss is easy to find using the chain rule:

The non-linear transfer function \(\sigma(x)\) therefore adds its derivative into the gradient:

The logistic function \(\sigma(x)=\frac{1}{1+\exp(-x)}\) has the nice property that its derivative can be expressed easily:

Note

Here is the proof using the fact that the derivative of \(\displaystyle\frac{1}{f(x)}\) is \(\displaystyle\frac{- f'(x)}{f^2(x)}\) :

The delta learning rule for the logistic regression model is therefore easy to obtain:

Generalized form of the delta learning rule

Fig. 2.35 Artificial neuron with multiple inputs.¶

For a linear perceptron with parameters \(W\) and \(\mathbf{b}\) and any activation function \(f\):

and the mse loss function:

the delta learning rule has the form:

In the linear case, \(f'(x) = 1\). One can use any non-linear function, e.g hyperbolic tangent tanh(), ReLU, etc. Transfer functions are chosen for neural networks so that we can compute their derivative easily.

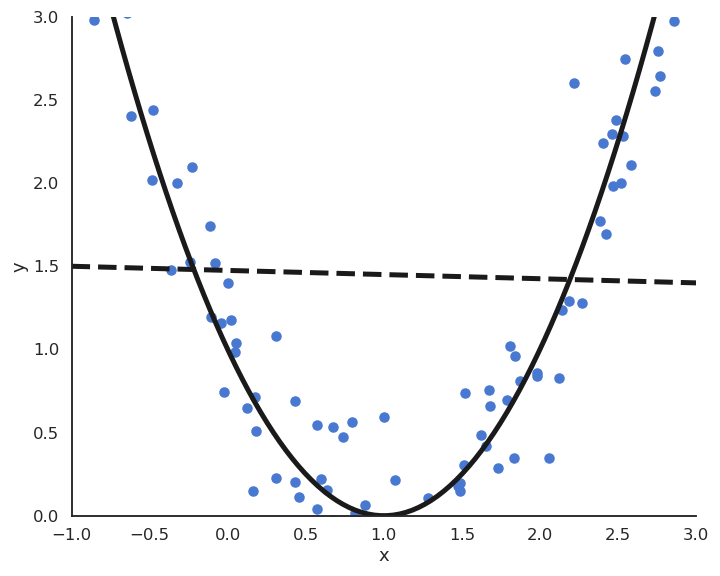

2.4. Polynomial regression¶

Fig. 2.36 Polynomial regression.¶

The functions underlying real data are rarely linear plus some noise around the ideal value. In the figure above, the input/output function is better modeled by a second-order polynomial:

We can transform the input into a vector of coordinates:

The problem becomes:

We can simply apply multiple linear regression (MLR) to find \(\mathbf{w}\) and b:

This generalizes to polynomials of any order \(p\):

We create a vector of powers of \(x\):

ad apply multiple linear regression (MLR) to find \(\mathbf{w}\) and b:

Non-linear problem solved! The only unknown is which order for the polynomial matches best the data. One can perform regression with any kind of parameterized function using gradient descent.