5. Semantic segmentation

5.1. Semantic segmentation

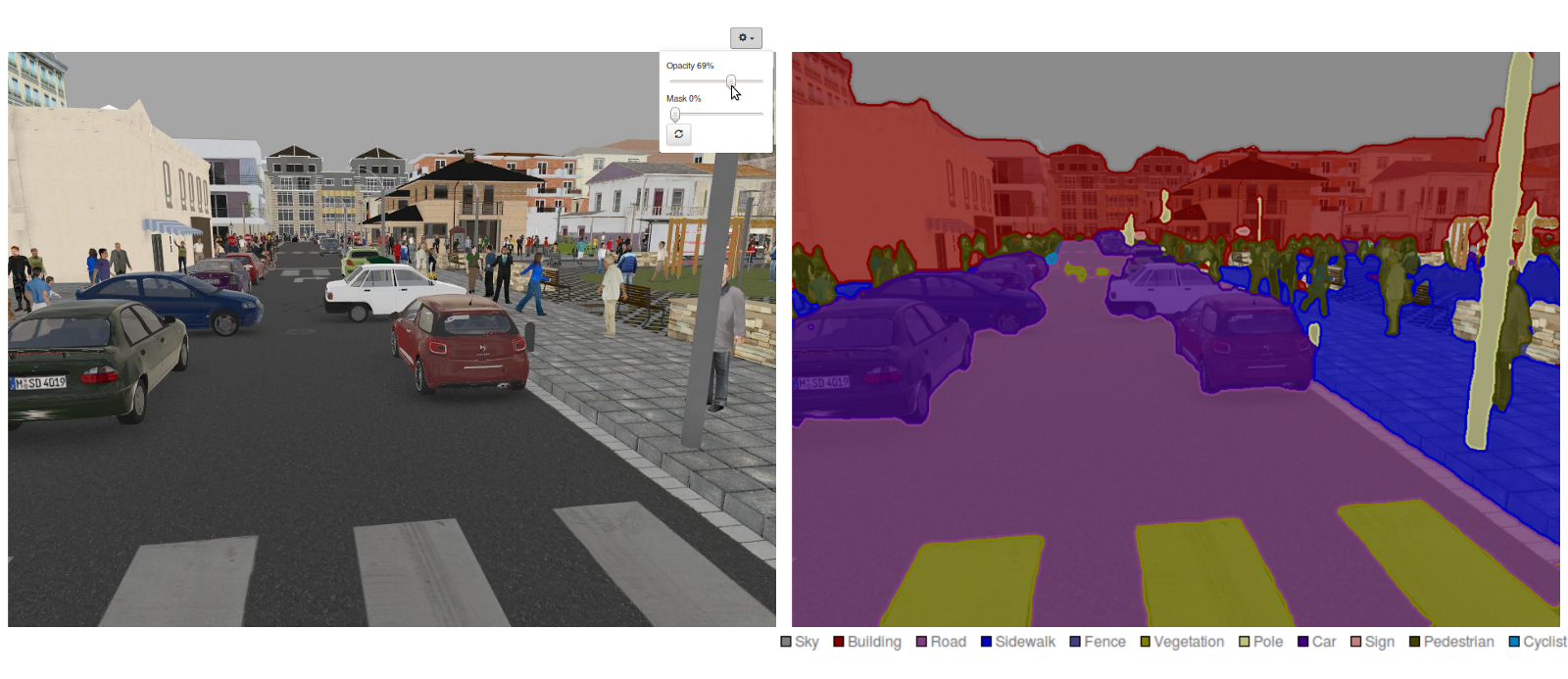

Semantic segmentation is a class of segmentation methods where you use knowledge about the identity of objects to partition the image pixel-per-pixel.

Fig. 5.7 Semantic segmentation. Source: https://medium.com/nanonets/how-to-do-image-segmentation-using-deep-learning-c673cc5862ef

Classical segmentation methods rely only on the similarity between neighboring pixels; they do not use class information. The output of a semantic segmentation is another image, where the value of each pixel represents the class of the corresponding input pixel.

The classes can be binary, for example foreground/background, person/not person, etc. Semantic segmentation networks are used for example in YouTube Stories to add virtual backgrounds (background matting).

Fig. 5.8 Virtual backgrounds. Source: https://ai.googleblog.com/2018/03/mobile-real-time-video-segmentation.html

Clothes can be segmented to allow for virtual try-ons.

Fig. 5.9 Virtual try-ons. Source: [Wang et al., 2018].

There are many datasets freely available, but annotating such data is very painful, expensive and error-prone.

PASCAL VOC 2012 Segmentation Competition

COCO 2018 Stuff Segmentation Task

BDD100K: A Large-scale Diverse Driving Video Database

Cambridge-driving Labeled Video Database (CamVid)

Cityscapes Dataset

Mapillary Vistas Dataset

ApolloScape Scene Parsing

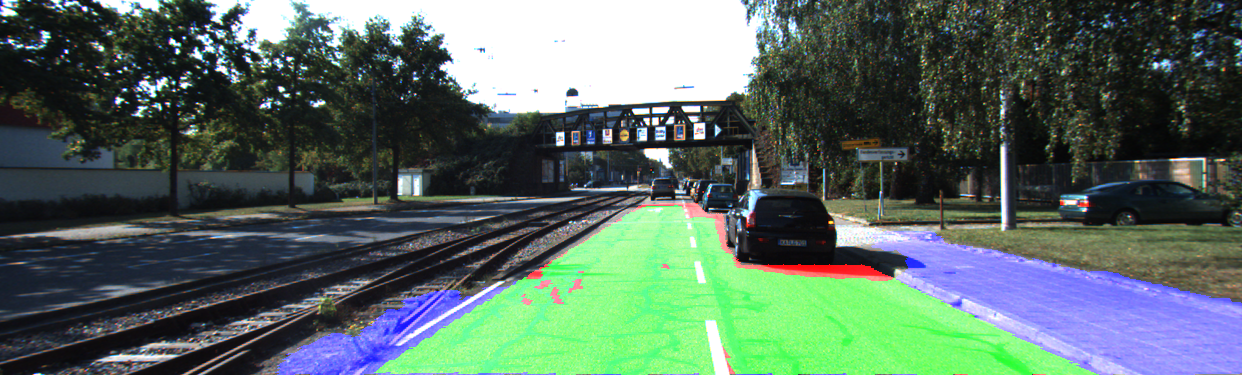

KITTI pixel-level semantic segmentation

Fig. 5.10 Semantic segmentation on the KITTI dataset. Source: http://www.cvlibs.net/datasets/kitti/¶

5.1.1. Output encoding

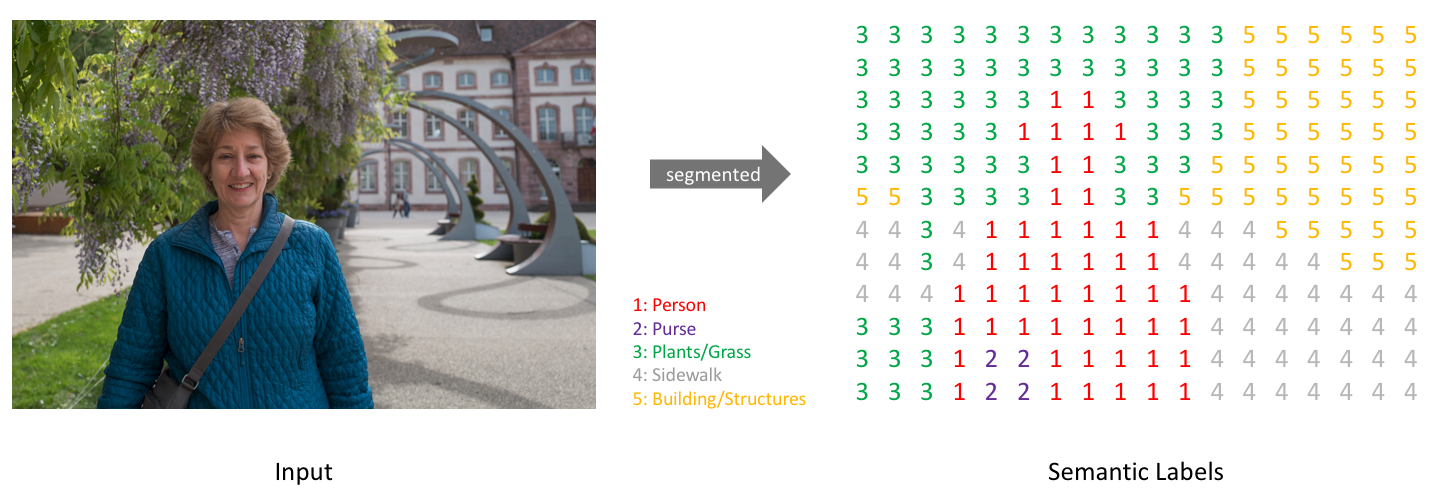

Each pixel of the input image is associated with a label (as in classification).

Fig. 5.11 Semantic labels. Source: https://medium.com/nanonets/how-to-do-image-segmentation-using-deep-learning-c673cc5862ef

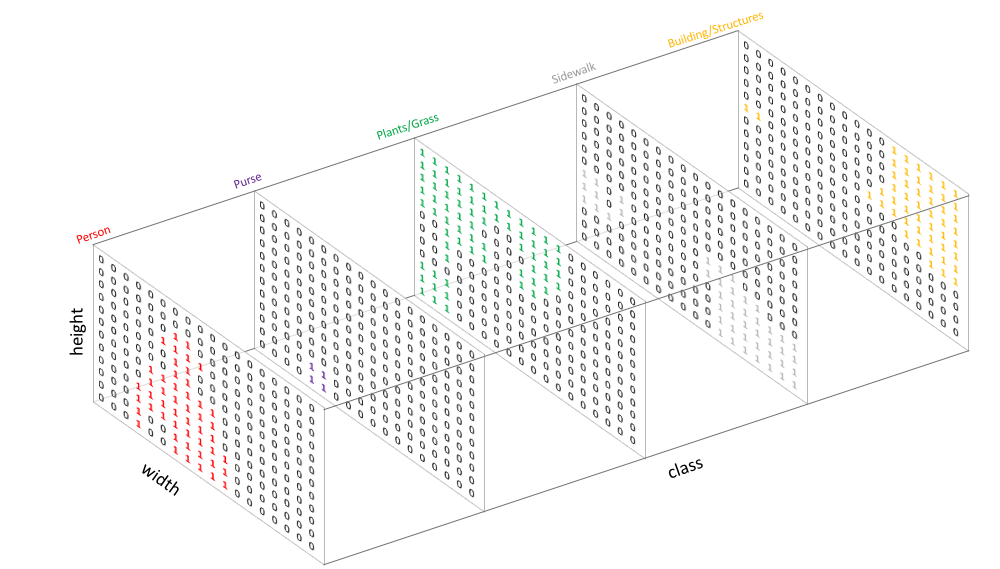

A one-hot encoding of the segmented image is therefore a tensor with one binary channel per class, of shape height × width × number of classes:

Fig. 5.12 One-hot encoding of semantic labels. Source: https://medium.com/nanonets/how-to-do-image-segmentation-using-deep-learning-c673cc5862ef
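The encoding can be illustrated with a minimal NumPy sketch. The label map and the class names in the comments are made up for the example; only the shapes matter.

```python
import numpy as np

# Hypothetical 3x4 label map with 3 classes (0 = background, 1 = person, 2 = car).
labels = np.array([
    [0, 0, 1, 1],
    [0, 2, 1, 1],
    [2, 2, 2, 0],
])
num_classes = 3

# Indexing the identity matrix with the label map yields one one-hot vector per pixel.
one_hot = np.eye(num_classes, dtype=np.float32)[labels]  # shape (H, W, C)

# Taking the argmax over the class axis recovers the original label map.
recovered = one_hot.argmax(axis=-1)

print(one_hot.shape)
```

Each of the `num_classes` channels of `one_hot` is a binary mask for one class, which is the form the network is trained to predict.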

5.1.2. Fully convolutional networks

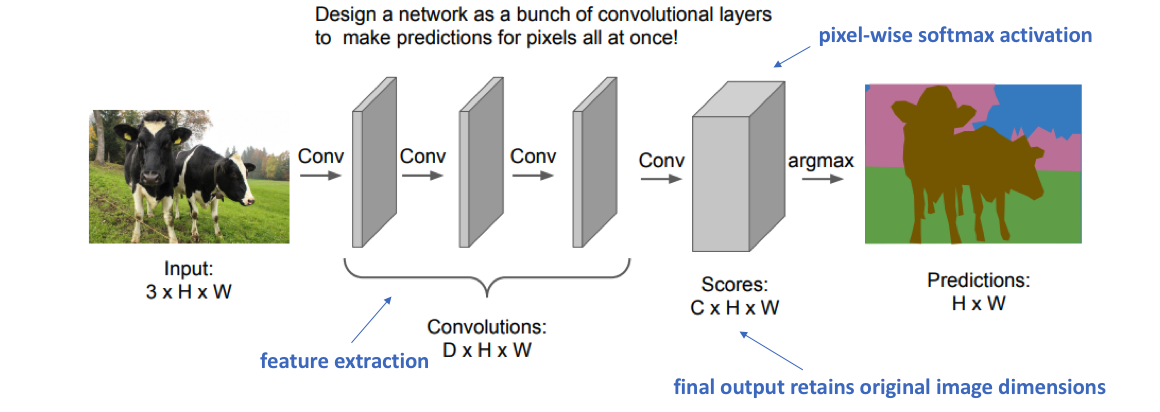

A fully convolutional network only has convolutional layers and learns to predict the output tensor. The last layer has a pixel-wise softmax activation. We minimize the pixel-wise cross-entropy loss between the predicted class probabilities and the one-hot labels.

Fig. 5.13 Fully convolutional network. Source: http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture11.pdf

The downside is that the image size is preserved throughout the network, which is computationally expensive. It is therefore difficult to increase the number of features in each convolutional layer.
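As a rough sketch of what the output layer computes, the snippet below applies a softmax over the class axis at every pixel and averages the cross-entropy over all pixels. The random `logits` and `labels` stand in for the output of the last convolutional layer and for an annotated image; the function names are chosen for this example only.

```python
import numpy as np

def pixelwise_softmax(logits):
    """Softmax over the class axis (last axis) of an (H, W, C) tensor."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def pixelwise_cross_entropy(probs, targets_one_hot, eps=1e-12):
    """Cross-entropy between one-hot targets and predictions, averaged over pixels."""
    per_pixel = -(targets_one_hot * np.log(probs + eps)).sum(axis=-1)  # shape (H, W)
    return per_pixel.mean()

rng = np.random.default_rng(0)
H, W, C = 4, 5, 3
logits = rng.normal(size=(H, W, C))       # hypothetical output of the last conv layer
labels = rng.integers(0, C, size=(H, W))  # hypothetical ground-truth label map
targets = np.eye(C)[labels]               # one-hot targets, shape (H, W, C)

probs = pixelwise_softmax(logits)
loss = pixelwise_cross_entropy(probs, targets)
print(loss)
```

Averaging over pixels (rather than summing) is a choice made here so that the loss does not scale with the image size.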

5.2. SegNet: segmentation network

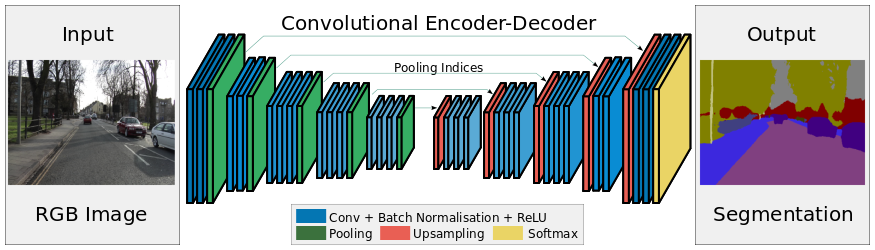

SegNet [Badrinarayanan et al., 2016] has an encoder-decoder architecture, with max-pooling to decrease the spatial resolution while increasing the number of features. But what is the inverse of max-pooling? An upsampling operation.

Fig. 5.14 SegNet [Badrinarayanan et al., 2016].

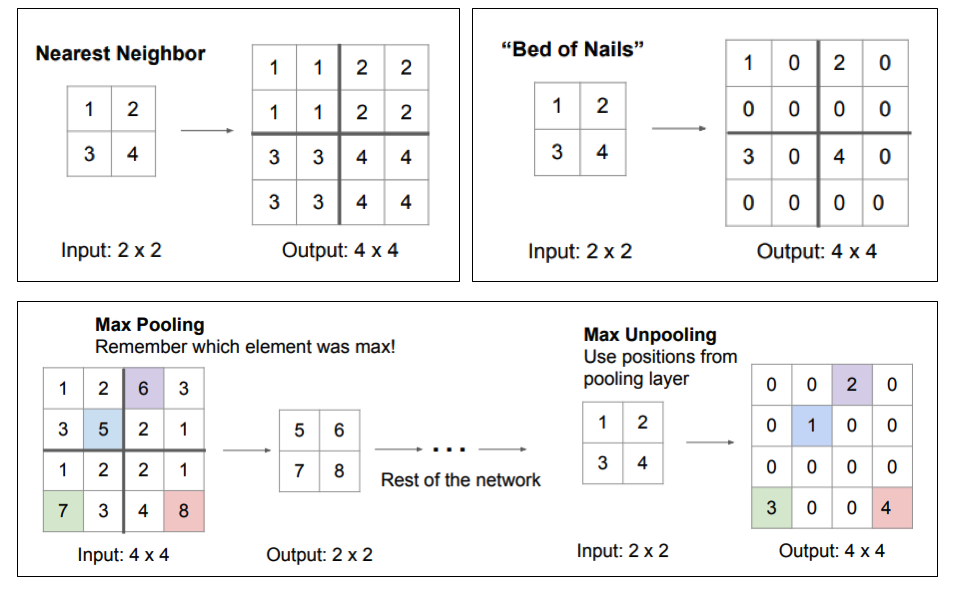

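Nearest neighbor and bed of nails make arbitrary decisions for the upsampling: nearest neighbor copies each value to its whole upsampled block, while bed of nails puts it at a fixed position of the block (e.g. the top-left corner) and fills the rest with zeros. In SegNet, max-unpooling reuses the positions of the maxima recorded by the corresponding max-pooling layer in the encoder to put each value back where it came from.

Fig. 5.15 Upsampling methods. Source: http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture11.pdf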
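The three upsampling methods can be compared on a small example. This is a minimal NumPy sketch for a single-channel map and 2×2 pooling with stride 2, not SegNet's actual implementation; the function names and the input values are chosen for illustration.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max-pooling with stride 2 on an (H, W) map; also returns the argmax positions."""
    H, W = x.shape
    # Group pixels into non-overlapping 2x2 blocks, flattened to 4 values each.
    blocks = x.reshape(H // 2, 2, W // 2, 2).transpose(0, 2, 1, 3).reshape(H // 2, W // 2, 4)
    return blocks.max(axis=-1), blocks.argmax(axis=-1)

def max_unpool_2x2(pooled, indices):
    """Put each pooled value back at the position recorded during max-pooling."""
    h, w = pooled.shape
    blocks = np.zeros((h, w, 4), dtype=pooled.dtype)
    np.put_along_axis(blocks, indices[..., None], pooled[..., None], axis=-1)
    return blocks.reshape(h, w, 2, 2).transpose(0, 2, 1, 3).reshape(2 * h, 2 * w)

def nearest_neighbor_2x(x):
    """Copy each value to its whole 2x2 output block."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def bed_of_nails_2x(x):
    """Place each value in the top-left corner of its 2x2 output block, zeros elsewhere."""
    out = np.zeros((2 * x.shape[0], 2 * x.shape[1]), dtype=x.dtype)
    out[::2, ::2] = x
    return out

x = np.array([
    [1, 2, 6, 3],
    [3, 5, 2, 1],
    [1, 2, 2, 1],
    [7, 3, 4, 8],
])
pooled, indices = max_pool_2x2(x)           # encoder side
unpooled = max_unpool_2x2(pooled, indices)  # decoder side, reusing the encoder indices

print(pooled)
print(unpooled)
print(nearest_neighbor_2x(pooled))
print(bed_of_nails_2x(pooled))
```

Only `max_unpool_2x2` needs information from the encoder (`indices`); the other two methods depend on the pooled values alone.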

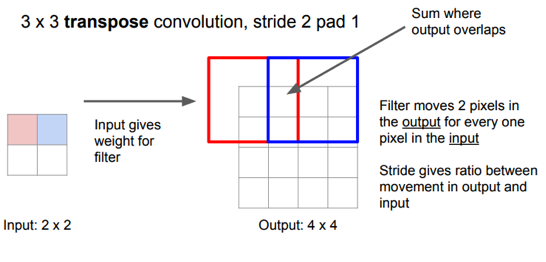

Another popular option in the successors of SegNet is the transposed convolution. The original feature map is upsampled by inserting zeros between its values, and a learned filter then performs a regular convolution to produce the upsampled feature map. This works well when strided convolutions are used in the encoder, but it is computationally quite expensive.

Fig. 5.16 Transposed convolution. Source: https://github.com/vdumoulin/conv_arithmetic

Fig. 5.17 Transposed convolution. Source: http://cs231n.stanford.edu/slides/2017/cs231n_2017_lecture11.pdf
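The sketch below implements a single-channel transposed convolution in two ways and lets one check that they agree: the direct definition, where each input value adds a scaled copy of the filter to the output, and the zero-insertion view described above. It assumes a square filter, no bias and no output cropping; the function names and the fixed `kernel` (standing in for a learned filter) are illustrative.

```python
import numpy as np

def transposed_conv2d_scatter(x, kernel, stride=2):
    """Direct definition: each input value adds a scaled copy of the kernel to the output."""
    h, w = x.shape
    k = kernel.shape[0]
    out = np.zeros(((h - 1) * stride + k, (w - 1) * stride + k))
    for i in range(h):
        for j in range(w):
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out

def transposed_conv2d_zero_insert(x, kernel, stride=2):
    """Same operation as zero insertion followed by a regular (stride 1) convolution."""
    h, w = x.shape
    k = kernel.shape[0]
    # 1. Insert (stride - 1) zeros between neighboring input values.
    dilated = np.zeros(((h - 1) * stride + 1, (w - 1) * stride + 1))
    dilated[::stride, ::stride] = x
    # 2. Pad with k - 1 zeros on each side so that the output grows instead of shrinking.
    padded = np.pad(dilated, k - 1)
    # 3. Slide the flipped kernel over the padded map (a regular stride-1 convolution).
    flipped = kernel[::-1, ::-1]
    H, W = padded.shape[0] - k + 1, padded.shape[1] - k + 1
    out = np.zeros((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = (padded[i:i + k, j:j + k] * flipped).sum()
    return out

x = np.array([[1.0, 2.0], [3.0, 4.0]])
kernel = np.arange(9, dtype=float).reshape(3, 3)  # stands in for a learned 3x3 filter

up_scatter = transposed_conv2d_scatter(x, kernel)
up_zero_insert = transposed_conv2d_zero_insert(x, kernel)
print(up_scatter.shape, np.allclose(up_scatter, up_zero_insert))
```

The zero-insertion version makes the cost visible: the convolution runs over the enlarged map, and most of the multiplications involve inserted zeros.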

5.3. U-Net

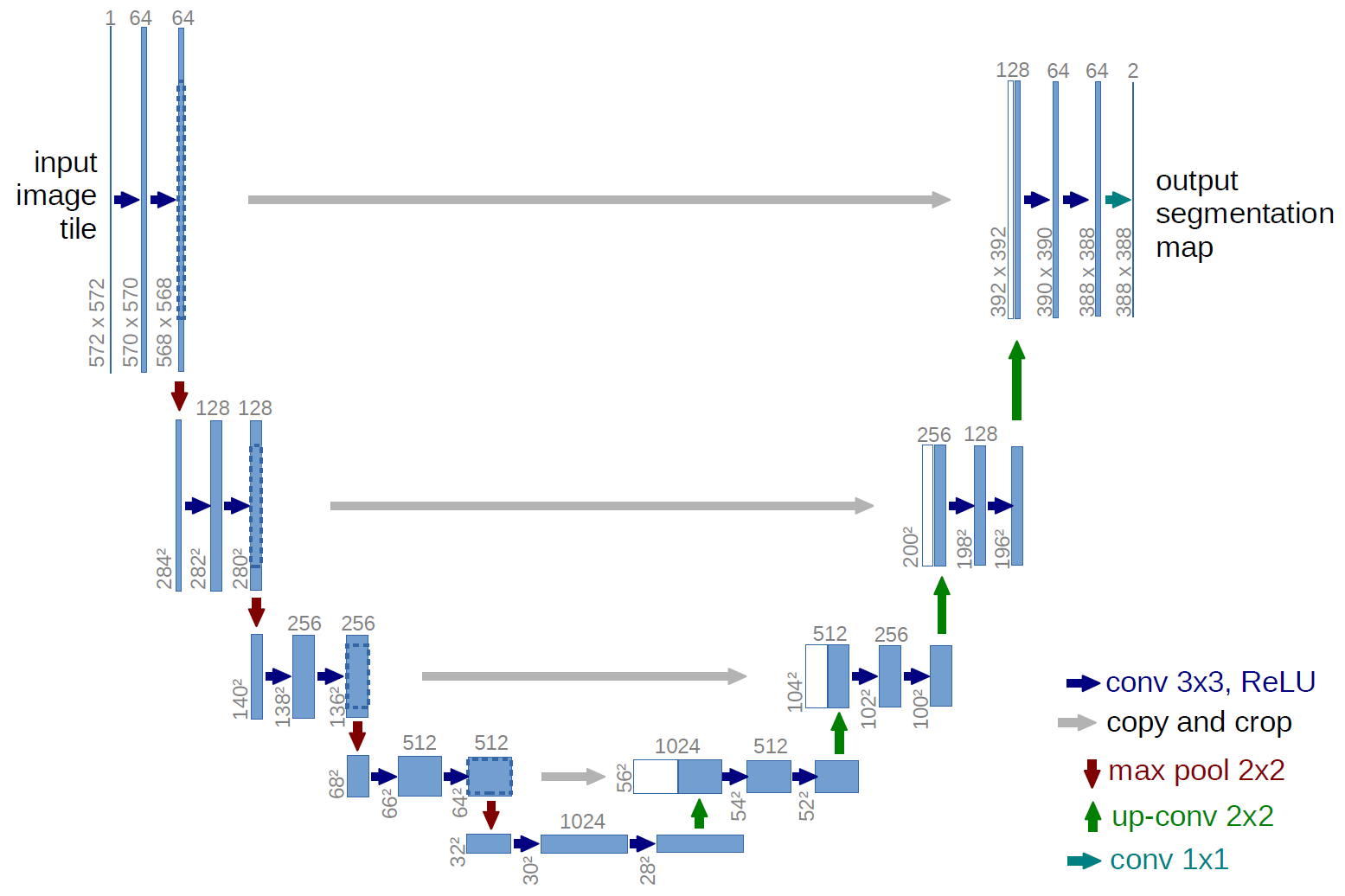

The problem with SegNet is that small details (small scales) are lost because of the max-pooling, so the segmentation is not precise. The solution proposed by U-Net [Ronneberger et al., 2015] is to add skip connections between different levels of the encoder-decoder (as in ResNet, except that the feature maps are concatenated rather than added). The final segmentation depends both on:

- large-scale information computed in the middle of the encoder-decoder.
- small-scale information processed in the early layers of the encoder.

Fig. 5.18 U-Net [Ronneberger et al., 2015].
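A skip connection of this kind amounts to a concatenation along the channel axis. The following sketch only tracks shapes: the feature maps are random, the channel counts are made up, and nearest-neighbor upsampling stands in for the learned upsampling of the decoder.

```python
import numpy as np

def upsample_nearest_2x(features):
    """Nearest-neighbor upsampling of a (C, H, W) feature map by a factor of 2."""
    return features.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
# Hypothetical shapes: early encoder features are high-resolution with few channels,
# features in the middle of the network are low-resolution with many channels.
encoder_features = rng.normal(size=(16, 8, 8))     # small-scale information
bottleneck_features = rng.normal(size=(32, 4, 4))  # large-scale information

# Decoder step: bring the low-resolution features back to the encoder resolution...
decoder_features = upsample_nearest_2x(bottleneck_features)            # (32, 8, 8)
# ...and concatenate the skip connection along the channel axis.
merged = np.concatenate([encoder_features, decoder_features], axis=0)  # (48, 8, 8)

print(merged.shape)
```

The convolutions that follow in the decoder then see both sources of information at every pixel.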

5.4. Mask R-CNN

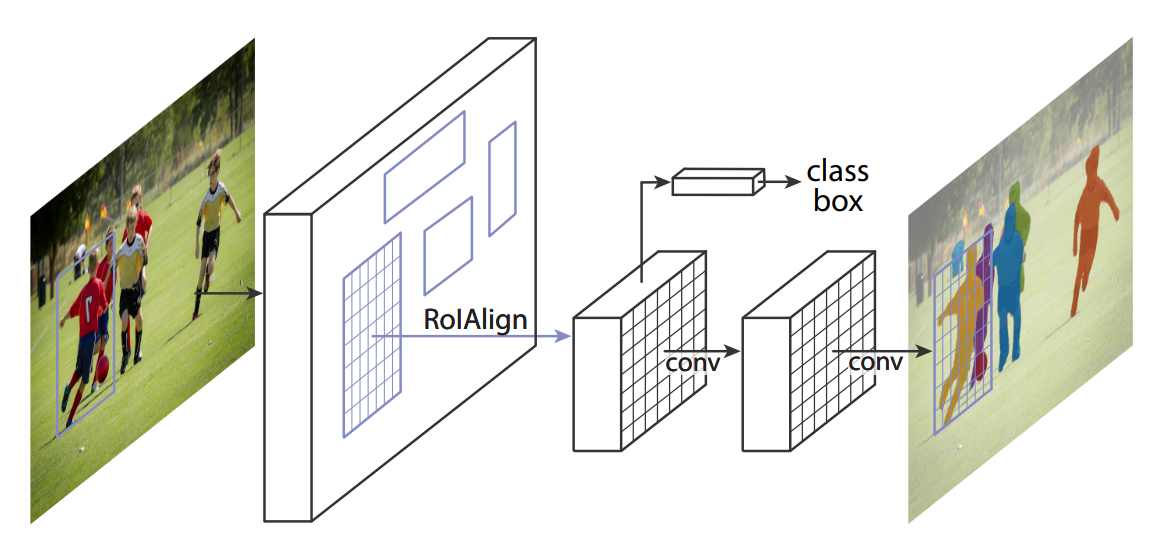

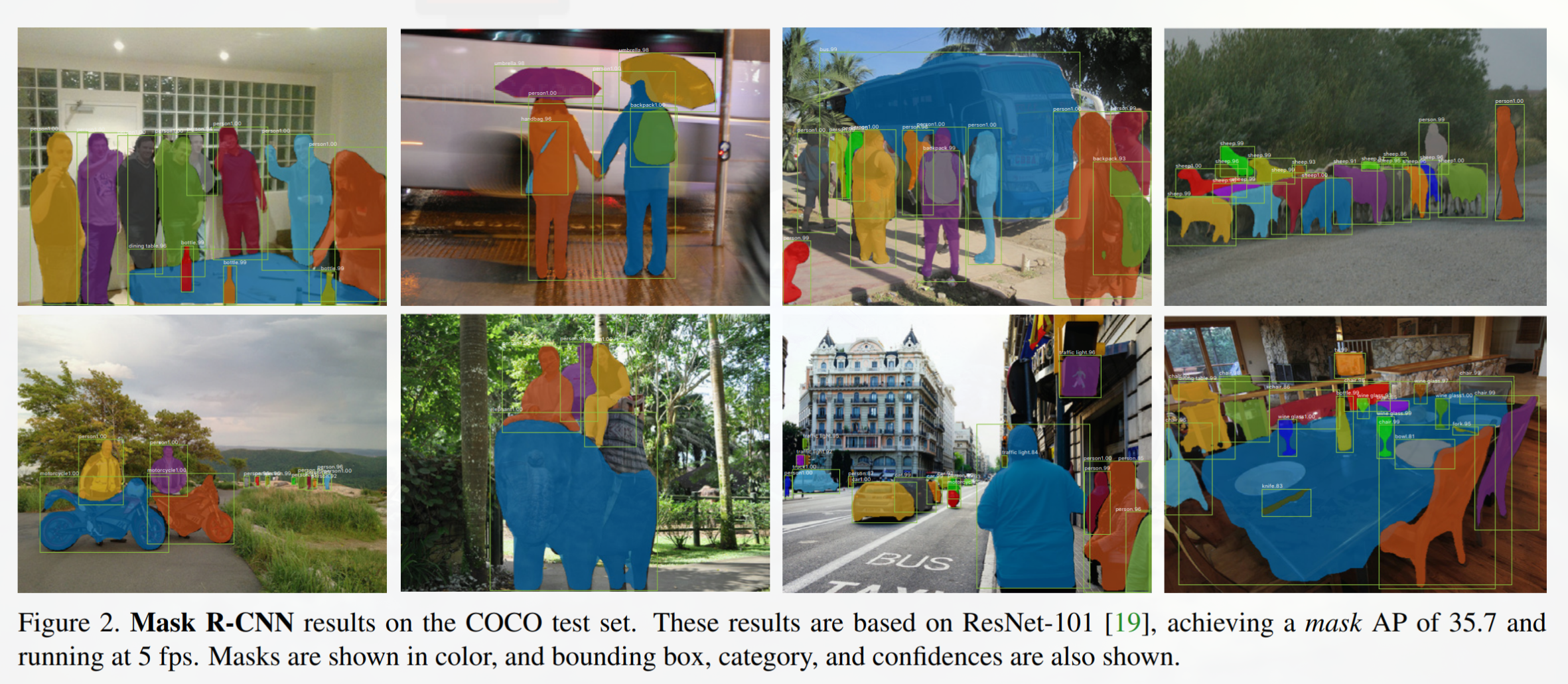

For many applications, segmenting the background is useless. A two-stage approach can save computations. Mask R-CNN [He et al., 2018] uses Faster R-CNN to extract bounding boxes around objects of interest, followed by the prediction of a mask to segment each object.

Fig. 5.19 Mask R-CNN [He et al., 2018].

Fig. 5.20 Mask R-CNN [He et al., 2018].
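To illustrate the second stage, the sketch below takes a small mask predicted for one bounding box, resizes it to the box and pastes it into an otherwise empty full-size mask. It is not the Mask R-CNN implementation: the box, the mask values, the function name `paste_mask` and the nearest-neighbor resizing are all assumptions made for the example.

```python
import numpy as np

def paste_mask(mask_probs, box, image_shape, threshold=0.5):
    """Resize a small per-object mask to its box and paste it into a full-size binary mask."""
    x0, y0, x1, y1 = box  # pixel coordinates, (x1, y1) exclusive
    box_h, box_w = y1 - y0, x1 - x0
    m_h, m_w = mask_probs.shape
    # Nearest-neighbor resize: pick the source row/column for every pixel of the box.
    rows = np.arange(box_h) * m_h // box_h
    cols = np.arange(box_w) * m_w // box_w
    resized = mask_probs[rows[:, None], cols[None, :]]
    # Pixels outside the box are never evaluated and stay background.
    full = np.zeros(image_shape, dtype=bool)
    full[y0:y1, x0:x1] = resized > threshold
    return full

# Hypothetical outputs of the two stages for a single object in an 8x8 image.
box = (2, 1, 6, 5)                    # (x0, y0, x1, y1) from the detection stage
mask_probs = np.array([[0.9, 0.1],
                       [0.2, 0.8]])   # low-resolution mask from the mask branch

object_mask = paste_mask(mask_probs, box, image_shape=(8, 8))
print(object_mask.astype(int))
```

The mask is only predicted inside the box, which is where the savings come from when most of the image is background.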