11.1. Transfer learning

The goal of the exercise is to investigate data augmentation and transfer learning on a very small dataset (2000 training images).

The code is based on the keras tutorial:

The data is provided as part of a Google tutorial:

import numpy as np

import matplotlib.pyplot as plt

rng = np.random.default_rng()

import tensorflow as tf

11.1.1. Loading the cats and dogs data

The data we will use is a subset of the “Dogs vs. Cats” dataset available on Kaggle, which contains 25,000 images. Here, we use only 1000 dogs and 1000 cats to decrease training time and make the problem harder.

The following cell downloads the data and decompresses it in /tmp/ (it will be erased at the next restart of your computer).

!wget --no-check-certificate \

https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \

-O /tmp/cats_and_dogs_filtered.zip

import os

import zipfile

local_zip = '/tmp/cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/tmp')

zip_ref.close()

--2020-12-23 11:23:19-- https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip

Resolving storage.googleapis.com (storage.googleapis.com)... 172.253.63.128, 142.250.31.128, 172.217.7.176, ...

Connecting to storage.googleapis.com (storage.googleapis.com)|172.253.63.128|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 68606236 (65M) [application/zip]

Saving to: ‘/tmp/cats_and_dogs_filtered.zip’

/tmp/cats_and_dogs_ 100%[===================>] 65.43M 183MB/s in 0.4s

2020-12-23 11:23:20 (183 MB/s) - ‘/tmp/cats_and_dogs_filtered.zip’ saved [68606236/68606236]

All we have is a bunch of *.jpg images organized in a training and validation set, separated by their binary class dog vs. cat:

cats_and_dogs_filtered/

    train/

        dogs/

            dog001.jpg

            dog002.jpg

            ...

        cats/

            cat001.jpg

            cat002.jpg

            ...

    validation/

        dogs/

            dog001.jpg

            dog002.jpg

            ...

        cats/

            cat001.jpg

            cat002.jpg

            ...

Feel free to download the data on your computer and have a look at the images directly.

The next cell checks the structure of the image directory:

base_dir = '/tmp/cats_and_dogs_filtered'

train_dir = base_dir + '/train'

validation_dir = base_dir + '/validation'

print('total training cat images:', len(os.listdir(train_dir + '/cats')))

print('total training dog images:', len(os.listdir(train_dir + '/dogs')))

print('total validation cat images:', len(os.listdir(validation_dir + '/cats')))

print('total validation dog images:', len(os.listdir(validation_dir + '/dogs')))

total training cat images: 1000

total training dog images: 1000

total validation cat images: 500

total validation dog images: 500

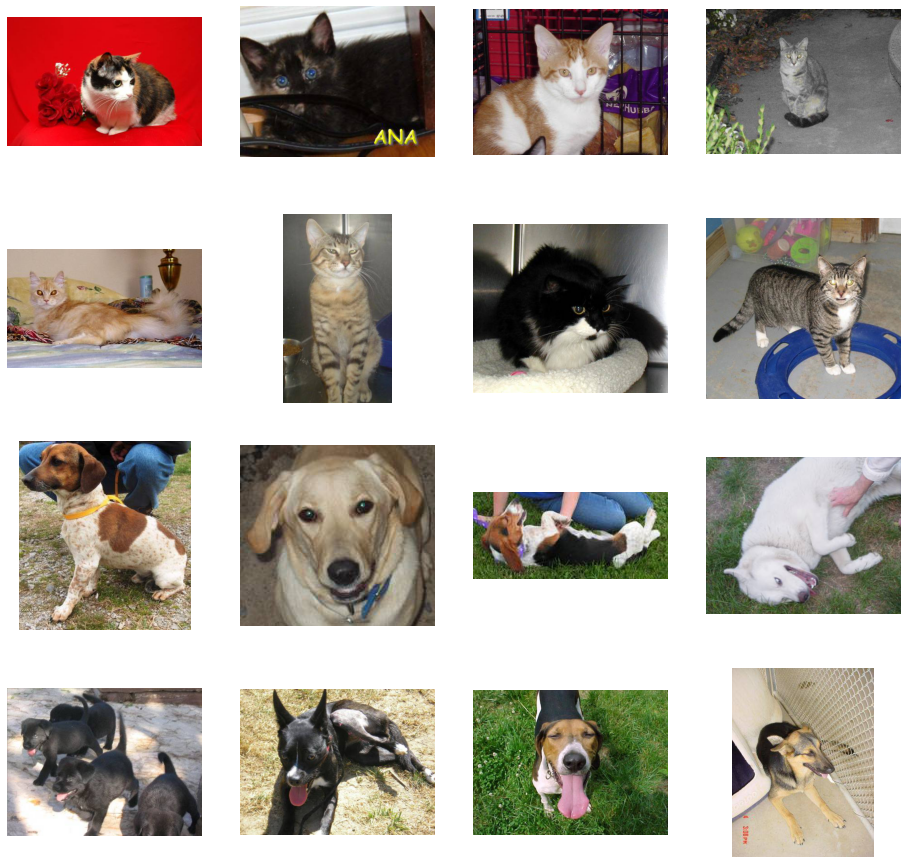

The next cell visualizes some cats and dogs from the training set.

import matplotlib.image as mpimg

fig = plt.figure(figsize=(16, 16))

idx = rng.choice(1000-8, 1)[0]

next_cat_pix = [os.path.join(train_dir + '/cats', fname) for fname in os.listdir(train_dir + '/cats')[idx:idx+8]]

next_dog_pix = [os.path.join(train_dir + '/dogs', fname) for fname in os.listdir(train_dir + '/dogs')[idx:idx+8]]

for i, img_path in enumerate(next_cat_pix+next_dog_pix):

    # Set up subplot; subplot indices start at 1

    ax = plt.subplot(4, 4, i + 1)

    ax.axis('Off') # Don't show axes (or gridlines)

    img = mpimg.imread(img_path)

    plt.imshow(img)

plt.show()

In order to train a binary classifier on this data, we would need to load the images and transform them into NumPy arrays that can be passed to TensorFlow. Fortunately, Keras provides a utility to do this automatically: ImageDataGenerator. Doc:

https://keras.io/api/preprocessing/image/

The procedure is to create an ImageDataGenerator instance and then to create an iterator with flow_from_directory that returns minibatches on demand when training the neural network. The main advantage of this approach is that you do not need to load the whole dataset into RAM (which is not possible for most realistic datasets), but it adds some overhead between minibatches.

datagen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)

generator = datagen.flow_from_directory(

    directory, # This is the source directory for training images

    target_size=(150, 150), # All images will be resized to 150x150

    batch_size=64,

    # Since we use binary_crossentropy loss, we need binary labels

    class_mode='binary')

The rescale argument makes sure that the pixels will be represented by float values between 0 and 1, not integers between 0 and 255. Unfortunately, it is not possible (or very hard) to perform mean-removal using this method. The image data generator accepts additional arguments that we will discuss in the section on data augmentation.
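As a rough illustration of what rescale=1./255 does, and of what mean-removal would look like if the whole dataset could be held in memory, here is a small NumPy sketch. The random array `images` is only a stand-in for real pictures and is not part of the exercise.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for 4 RGB images of size 150x150 with integer pixels in [0, 255]
images = rng.integers(0, 256, size=(4, 150, 150, 3), dtype=np.uint8)

# Equivalent of rescale=1./255: float values between 0 and 1
rescaled = images.astype(np.float32) * (1.0 / 255)

# Mean-removal needs a mean computed over the whole training set, which is
# why it is awkward when images are streamed from disk minibatch by minibatch
channel_mean = rescaled.mean(axis=(0, 1, 2))  # one mean per color channel
centered = rescaled - channel_mean

print(rescaled.dtype, channel_mean.shape)
```

The point of the sketch is that the rescaling is a purely local operation on each image, while mean-removal depends on a statistic of the complete dataset.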

directory must be set to the folder containing the images. We ask the generator to resize all images to 150x150 and will use a batch size of 64. As there are only two classes cat and dog, the labels will be binary (0 and 1).
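To make the idea of "minibatches on demand" more concrete, the following is a minimal sketch of a lazy batch iterator in plain Python. It is not how ImageDataGenerator is implemented; the function `minibatches`, the loader `fake_loader` and the file names are all hypothetical and only serve the illustration.

```python
import numpy as np

def minibatches(paths, labels, load_image, batch_size=64, rng=None):
    """Yield (X, t) minibatches forever, loading only one batch of images at a time."""
    if rng is None:
        rng = np.random.default_rng()
    paths = np.asarray(paths)
    labels = np.asarray(labels, dtype=np.float32)
    while True:
        order = rng.permutation(len(paths))  # reshuffle at the start of each epoch
        for start in range(0, len(paths), batch_size):
            idx = order[start:start + batch_size]
            # Images are read here, on demand, not when the iterator is created
            X = np.stack([load_image(p) for p in paths[idx]])
            yield X, labels[idx]

# Hypothetical loader: returns a blank 150x150 RGB image instead of reading a file
def fake_loader(path):
    return np.zeros((150, 150, 3), dtype=np.float32)

paths = [f"img_{i}.jpg" for i in range(100)]
labels = [i % 2 for i in range(100)]

batches = minibatches(paths, labels, fake_loader, batch_size=64)
X, t = next(batches)
print(X.shape, t.shape)
```

With 100 images and a batch size of 64, the second call to next() returns the remaining 36 images, after which a new epoch starts with a fresh shuffle.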

Q: Create two generators train_generator and validation_generator for the training and validation sets respectively.

Q: Sample a minibatch from the training generator by calling next() on it (X, t = train_generator.next()) and display the first image. Call the cell multiple times.

11.1.2. Functional API of Keras

In the previous exercises, we used the Sequential API of keras, which stacks layers on top of each other:

model = tf.keras.Sequential()

model.add(tf.keras.layers.Input((28, 28, 1)))

model.add(tf.keras.layers.Conv2D(32, (3, 3), activation='relu'))

model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(10, activation='softmax'))

In this exercise, we will use the Functional API of keras, which gives much more freedom to the programmer. The main difference is that you can explicitly specify from which layer a layer should take its inputs:

inputs = tf.keras.layers.Input((28, 28, 1))

x = tf.keras.layers.Conv2D(32, (3, 3), activation='relu')(inputs)

x = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(x)

x = tf.keras.layers.Flatten()(x)

outputs = tf.keras.layers.Dense(10, activation='softmax')(x)

model = tf.keras.Model(inputs, outputs)

This makes it possible to create complex architectures, for example with several output layers.
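As a sketch of what "several output layers" can look like, the network above can be given a second head that reads from the same flattened features. The second output (`parity`, predicting whether the digit is odd) is an assumption made up for this illustration, not something used in the exercise.

```python
import numpy as np
import tensorflow as tf

inputs = tf.keras.layers.Input((28, 28, 1))
x = tf.keras.layers.Conv2D(32, (3, 3), activation='relu')(inputs)
x = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(x)
x = tf.keras.layers.Flatten()(x)

# Two heads taking their input from the same tensor x
digit = tf.keras.layers.Dense(10, activation='softmax', name='digit')(x)
parity = tf.keras.layers.Dense(1, activation='sigmoid', name='parity')(x)  # hypothetical second task

model = tf.keras.Model(inputs, [digit, parity])

# A forward pass returns one tensor per output layer
outputs = model(np.zeros((2, 28, 28, 1), dtype='float32'))
print([tuple(o.shape) for o in outputs])
```

Such a branching structure cannot be expressed with the Sequential API, since each layer there has exactly one predecessor and one successor.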

Q: Modify your CNN of last exercise so that it is defined with the Functional API and train it on MNIST.

11.1.3. Training a CNN from scratch

Let’s now train a randomly-initialized CNN on the dog vs. cat data. You are free to choose any architecture you like, the only requirements are:

The input image must be 150x150x3:

tf.keras.layers.Input(shape=(150, 150, 3))

The output neuron must use the logistic/sigmoid activation function (binary classification):

tf.keras.layers.Dense(1, activation='sigmoid')

The loss function must be 'binary_crossentropy' and the metric binary_accuracy:

model.compile(loss='binary_crossentropy',

              optimizer=optimizer,

              metrics=['binary_accuracy'])

There is not a lot of data, so you can safely go deep with your architecture (i.e. with convolutional layers and max-pooling until the image dimensions are around 7x7), especially if you use the GPU on Colab.
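To get a feeling for how many blocks are needed to reach a feature map of about 7x7, the side length can be tracked by hand. The sketch below assumes 3x3 convolutions without padding (the default 'valid' mode) followed by 2x2 max-pooling; the helper `output_size` is hypothetical, and other choices of kernel size or padding give different numbers.

```python
def output_size(size, n_blocks, kernel=3, pool=2):
    """Side length after n_blocks of (unpadded convolution + max-pooling)."""
    for _ in range(n_blocks):
        size = size - (kernel - 1)  # a 'valid' convolution removes kernel-1 pixels
        size = size // pool         # 2x2 max-pooling halves the size, rounding down
    return size

for n in range(1, 5):
    print(n, output_size(150, n))
```

Under these assumptions, the side length goes from 150 to 74, 36, 17 and finally 7, so four convolution/pooling blocks are enough.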

To train and validate the network on the generators, just pass them to model.fit():

model.fit(

    train_generator,

    epochs=20,

    validation_data=validation_generator,

    callbacks=[history])

Q: Design a CNN and train it on the data for 30 epochs. A final validation accuracy around 72% - 75% is already good, you can then go to the next question.

11.1.4. Data augmentation

The 2000 training images will never be enough to train a CNN from scratch without overfitting, no matter how much regularization you use. A first trick that may help is data augmentation, i.e. to artificially create variations of each training image (translation, rotation, scaling, flipping, etc.) while preserving the class of the images (a cat stays a cat after rotating the image).
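The principle can be sketched with two simple transformations in NumPy. This is only an illustration under simplifying assumptions: the random array `image` stands in for a real picture, the helper `random_flip_and_shift` is hypothetical, and the shift wraps around the border instead of filling the missing pixels as a real augmentation pipeline would.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.random((150, 150, 3))  # stand-in for a rescaled cat picture

def random_flip_and_shift(img, rng, max_shift=0.2):
    """Return a randomly mirrored and horizontally shifted copy of img."""
    # Mirror the image left-right with probability 0.5
    out = img[:, ::-1] if rng.random() < 0.5 else img.copy()
    # Shift by at most max_shift of the width (pixels wrap around the border)
    limit = int(max_shift * img.shape[1])
    shift = rng.integers(-limit, limit + 1)
    return np.roll(out, shift, axis=1)

# Eight different variations of the same image, all with the same label
augmented = [random_flip_and_shift(image, rng) for _ in range(8)]
print(len(augmented), augmented[0].shape)
```

Each variation contains the same pixels in a different arrangement, so the label is unchanged while the network never sees exactly the same input twice.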

ImageDataGenerator can automatically apply various transformations when retrieving a minibatch (beware, it can be slow).

datagen = tf.keras.preprocessing.image.ImageDataGenerator(

    rescale=1./255,

    rotation_range=40,

    width_shift_range=0.2,

    height_shift_range=0.2,

    shear_range=0.2,

    zoom_range=0.2,

    horizontal_flip=True,

    fill_mode='nearest'

)

Refer to the documentation for the meaning of the parameters.

To investigate the transformations, let’s apply them on a single image, for example the first cat of the training set:

img = tf.keras.preprocessing.image.load_img('/tmp/cats_and_dogs_filtered/train/cats/cat.0.jpg')

img = tf.keras.preprocessing.image.img_to_array(img)

img = img.reshape((1,) + img.shape)

We can pass this image to the data generator and retrieve minibatches of augmented images:

generator = datagen.flow(img, batch_size=1)

augmented = generator.next()

Q: Display various augmented images. Vary the parameters individually by setting all but one to their default value in order to understand their effect.

Q: Create an augmented training set using the parameters defined in the previous question (feel free to experiment, but that can cost time). Leave the validation generator without data augmentation (only rescale=1./255). Train the exact same network as before on this augmented data. What happens? You may need to train much longer in order to see the effect.

11.1.5. Transfer learning

Data augmentation helps randomly initialized networks to learn from small datasets, but the best solution is to start training with already good weights.

Transfer learning makes it possible to reuse the weights of a CNN trained on a bigger dataset (e.g. ImageNet), either to extract features for a shallow classifier or as a starting point for fine-tuning all the weights.

Keras provides a considerable number of pre-trained CNNs:

https://keras.io/api/applications/

In this exercise, we will use the Xception network for feature extraction, but feel free to experiment with other architectures. To download the weights and create the keras model, simply call:

xception = tf.keras.applications.Xception(

    weights="imagenet", # Load weights pre-trained on ImageNet.

    input_shape=(150, 150, 3), # Input shape

    include_top=False, # Only the convolutional layers, not the last fully-connected ones

)

include_top=False removes the last fully-connected layers used to predict the ImageNet classes, as we only care about the binary cat/dog classification.

Q: Download Xception and print its summary. Make sense of the various layers (the paper might help: http://arxiv.org/abs/1610.02357). What is the size of the final tensor?

Let’s now apply transfer learning with this network. The first thing to do is to freeze Xception to make sure that it does not learn from the cats and dogs data:

xception.trainable = False

We can then connect Xception to the inputs, making sure again that the network won’t learn (in particular, the parameters of batch normalization are kept):

inputs = tf.keras.Input(shape=(150, 150, 3))

x = xception(inputs, training=False)
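The effect of freezing can be checked without downloading Xception. The sketch below uses a tiny stand-in network (`base`, with one convolution and one batch normalization layer, both chosen arbitrarily for the illustration) and counts the parameters that would still be updated during training.

```python
import numpy as np
import tensorflow as tf

# Tiny stand-in for a pre-trained network (hypothetical, not Xception)
base = tf.keras.Sequential([
    tf.keras.layers.Input((8, 8, 3)),
    tf.keras.layers.Conv2D(4, (3, 3)),
    tf.keras.layers.BatchNormalization(),
])
base.trainable = False  # freeze all its weights

inputs = tf.keras.Input(shape=(8, 8, 3))
x = base(inputs, training=False)  # batch normalization stays in inference mode
x = tf.keras.layers.GlobalAveragePooling2D()(x)
outputs = tf.keras.layers.Dense(1, activation='sigmoid')(x)
model = tf.keras.Model(inputs, outputs)

# Only the weights of the new output layer remain trainable
n_trainable = sum(int(np.prod(tuple(w.shape))) for w in model.trainable_weights)
print(n_trainable)
```

Here only the 4 weights and the bias of the final Dense layer remain trainable; the same check can be applied to the model built on top of Xception.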

We can now use the layer x and stack what we want on top of it. Instead of flattening the 5x5x2048 tensor, it is usually better to apply average-pooling (or mean-pooling) on each 5x5 feature map to obtain a vector with 2048 elements:

x = tf.keras.layers.GlobalAveragePooling2D()(x)
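The difference between flattening and global average pooling can be seen on a plain NumPy array. The random array `features` below is only a stand-in for the output of Xception on a minibatch of 64 images.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.random((64, 5, 5, 2048))  # stand-in for a batch of 5x5x2048 tensors

flattened = features.reshape(len(features), -1)  # what flattening would produce
pooled = features.mean(axis=(1, 2))              # mean over each 5x5 feature map

print(flattened.shape)  # (64, 51200)
print(pooled.shape)     # (64, 2048)
```

Averaging over the spatial dimensions divides the number of inputs to the classifier by 25, which greatly reduces the number of weights to learn from only 2000 images.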

Q: Perform a soft linear classification on this vector with 2048 elements to distinguish cats from dogs (using non-augmented data). Do not hesitate to use some dropout and to boost your learning rate, as there are only 2049 trainable parameters. Conclude.
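As a reminder of what a soft linear classifier computes, and of where the 2049 parameters come from, here is a NumPy sketch of the forward pass of a logistic regression. The random features and weights are placeholders; this is not a trained model and not the Keras solution to the question.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.random((64, 2048))        # stand-in for a minibatch of pooled features
w = rng.normal(0.0, 0.01, 2048)   # one weight per feature
b = 0.0                           # a single bias

# Logistic regression: weighted sum followed by the sigmoid function
y = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # predicted probability of class 1

n_parameters = w.size + 1  # 2048 weights + 1 bias
print(y.shape, n_parameters)
```

In Keras, this corresponds to a single Dense layer with one output neuron and a sigmoid activation placed on top of the pooled features.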